Earlier this year, we asked the awesome /r/BigSEO redditors to tell us about the most embarrassing SEO fails they've ever witnessed. There were two prizes: one for the SEO fail that received the most upvotes, and one that was hand-picked by the BigSEO moderators (not us). We received a lot of great submissions, which we'll be covering in this article. Where needed, we reached out to the submitters to get additional background information on the SEO fail so we could get it right.

Why did we run this contest?

Because openly talking about our SEO fails gives others the ability to learn from them. There's a taboo here that we're trying to break.

How are we trying to break this taboo?

By putting it out in the open. We've previously written about our own SEO fails from back when we ran an agency, and about the SEO fails that some of the leading SEO experts have witnessed.

Learn from these SEO fails, do what you can to prevent them from happening to you, and make sure you're prepared when disaster strikes. If there's something we can be sure of, it's that disaster will strike. We all mess up. But we've made it our mission to minimize the messes.

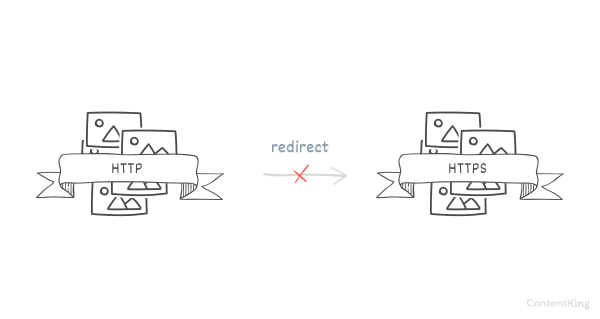

Forgot to migrate image URLs when migrating to HTTPS (WINNER!)

By PPCInformer

What happened?

Image traffic was roughly 60-70% of search traffic on the site, and the images were well indexed. A few years ago, while I was handling the SEO, there were talks of moving the site to HTTPS. It was still in the planning phase, but the developers migrated the image URLs from HTTP to HTTPS in production. As you can imagine, traffic tanked.

How long did it take before you noticed it?

A couple of weeks after the change there was a sharp decline in organic traffic. Thankfully, Search Console lets you see organic traffic by search type (Web, Image, Video), which helped me identify the issue.

What did you do to prevent it from happening again, or find out quicker in the future?

More education, and all URL changes need to go through the SEO team.

From a process standpoint, it's important to always monitor your rankings and organic traffic for any anomalies. Set up alerts for any sharp declines in either your rankings or your organic traffic.
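As a rough illustration of such an alert, here is a minimal Python sketch. It assumes you export daily click counts per search type (for example, Image) yourself; the function name, the 7-day window, and the 30% threshold are hypothetical choices, not something the submitter described.

```python
from statistics import mean

def sharp_decline(daily_clicks, window=7, threshold=0.30):
    """Return True when the most recent `window` days average at least
    `threshold` (as a fraction) below the `window` days before them."""
    if len(daily_clicks) < 2 * window:
        return False  # not enough history to compare two full windows
    baseline = mean(daily_clicks[-2 * window:-window])
    recent = mean(daily_clicks[-window:])
    if baseline == 0:
        return False  # nothing to decline from
    return (baseline - recent) / baseline >= threshold

# Hypothetical image-search clicks: one steady week, then a drop.
after_change = [1000] * 7 + [400] * 7
if sharp_decline(after_change):
    print("Alert: image-search clicks dropped sharply week over week")
```

Running a check like this per search type, rather than on total organic traffic only, is what would surface a problem that affects images but not web results.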

From an organizational standpoint, make sure that everyone who's involved in your SEO matters has a basic understanding of SEO and knows when to consult with experts.

Accidentally merged three domains into one

By mrseolover

What happened?

At my agency we had a client with a WPML setup, with the following TLDs: .de, .ch and .com. We had some issues with redirects from non-www to www, so I asked our developers to look into that. Anyway, after an hour or so one of our devs reported back that it should all be working, so we crossed it off and continued with the rest of the day. It turned out the devs had messed up the redirect config and accidentally redirected the .ch and .com to the .de!

How long did it take you before you noticed it?

We found out 2 days later, because both the client and we were only checking the German site regularly.

What did you do to prevent it from happening again, or find out quicker in the future?

We now have a more extended process for post-release checking but honestly, it's still up to a person to execute it. It's not ideal I know but we're improving it.

Set up monitoring for your websites with ContentKing, so you get notified right away about key pages being redirected (or removed, or returning server errors).

Aside from that, always test whether your work is really working as expected.
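A post-release check for this kind of mistake can be partly automated. The sketch below is only an illustration: it assumes you have already recorded, for each URL you requested, the URL it finally resolved to (how you collect that is up to you), and the domains shown are hypothetical.

```python
from urllib.parse import urlsplit

def bare_host(url):
    """Lowercased hostname without a leading 'www.'."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host

def cross_domain_redirects(observed):
    """`observed` maps each requested URL to the URL it finally resolved to.
    Returns the entries whose final host differs from the requested host,
    ignoring a pure non-www to www change."""
    return {
        src: dst
        for src, dst in observed.items()
        if bare_host(src) != bare_host(dst)
    }

# Hypothetical results after the redirect change went live.
observed = {
    "http://example.de/": "https://www.example.de/",
    "http://example.ch/": "https://www.example.de/",
    "http://example.com/": "https://www.example.de/",
}
for src, dst in cross_domain_redirects(observed).items():
    print(f"Unexpected cross-domain redirect: {src} -> {dst}")
```

The important part is that the check covers every domain in the setup, not just the one people happen to look at every day.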

Canonicalization gone wrong

By Maizeee

What happened?

I have a website with thousands of pages (kind of like a directory) and had one specific page that I wanted to canonicalize to another page (to tell Google it's the same content).

I coded it quick and dirty for that one page, my PHP approach was an

if (page = 'pagename') { add rel=canonical }

Well f*ck, I missed one equal sign: (page = 'pagename') is an assignment rather than a comparison, so it's always true. That made 100% of my pages rel=canonical to just one page. Google hated/loved it, and deindexed almost my entire website.

How long did it take you before you noticed it?

It took me about one week to notice the disaster with a significant user-drop and it was a very slow recovery process.

What did you do to prevent it from happening again, or find out quicker in the future?

Since that day I've been paranoid about checking my source code for rel="canonical" tags.

Set up monitoring for your websites with ContentKing, so you get notified right away about key pages being redirected (or removed, or returning server errors).

Aside from that, always test whether your work is really working as expected.
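One way to test for this particular failure is to look at where your pages' canonicals point and flag any target that a large share of the site suddenly points to. The following Python sketch assumes you already have the HTML of a sample of pages in memory; the class and function names and the 50% threshold are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> it sees."""
    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attrs.get("href")

def canonical_of(html):
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical

def suspicious_targets(pages, max_share=0.5):
    """`pages` maps URL -> HTML. Returns canonical targets that more than
    `max_share` of all pages point to from a different URL."""
    counts = Counter()
    for url, html in pages.items():
        target = canonical_of(html)
        if target and target != url:
            counts[target] += 1
    return [t for t, n in counts.items() if n / len(pages) > max_share]
```

On a healthy directory-style site, most pages are self-canonical, so a single target collecting more than half of all canonicals is a strong hint that a template condition went wrong.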

Content pruning gone wrong

By DM_Kevin

What happened?

We wanted to do content pruning on a blog but couldn't assign a meta robots noindex tag to every low-quality page. A developer suggested using the robots.txt instead, which we did.

So, we copy-pasted around 30% of all URLs into the robots.txt to exclude them from being crawled and raise our quality level on the blog. Days pass by.

Suddenly, I get angry messages from colleagues, asking why they can't find their articles on Google anymore. O_O

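Turns out robots.txt rules are prefix matches: when you don't add a $ at the end of a path, the rule excludes every URL that starts with that path (even if there's no trailing slash). The $ marks the end of the URL, much like the end-of-line anchor in regular expressions.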

Of course we added the $ signs and fixed the problem. Sweaty days, tho!

How long did it take you before you noticed it?

Unfortunately, we didn't get any input on this.

What did you do to prevent it from happening again, or find out quicker in the future?

Unfortunately, we didn't get any input on this.

Monitor your robots.txt with ContentKing, so you get notified right away about robots.txt changes, and always make sure that your changes aren't making your pages inaccessible to search engines. There's a check for this in ContentKing, but be sure to also use Google Search Console's Robots.txt tester to verify your robots.txt directives.
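To see why the missing $ matters, here is a small Python sketch of how a single robots.txt path pattern is matched, following the behavior Google documents: rules are prefix matches, * matches any sequence of characters, and a trailing $ anchors the end of the URL. It is a simplified illustration rather than a full robots.txt parser, and the blog paths are hypothetical.

```python
import re

def rule_matches(pattern, path):
    """Match one robots.txt path pattern against a URL path.
    Without a trailing '$' the pattern only has to match the start of
    the path; with it, the whole path has to match."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then turn each '*' into '.*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    matcher = re.fullmatch if anchored else re.match
    return matcher(regex, path) is not None

# Intended to block only the low-quality post...
print(rule_matches("/blog/seo-tips", "/blog/seo-tips"))            # blocked
# ...but the unanchored rule also blocks a different article.
print(rule_matches("/blog/seo-tips", "/blog/seo-tips-for-2019"))   # blocked too
# With '$', only the exact URL is blocked.
print(rule_matches("/blog/seo-tips$", "/blog/seo-tips-for-2019"))  # not blocked
```

Running a list of URLs you want to keep indexed through a check like this before deploying a robots.txt change would catch accidental prefix matches.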

The wrath of duplicate content back in 2007

By vinautomatic

What happened?

It was late 2006, early 2007, pre-iPhone. Facebook hadn't really taken off yet, and Search Marketing and MySpace were still all the rage. I had started freelancing doing websites and got asked about an SEO project for a brother's website that I had built for him in plain HTML/CSS in Dreamweaver. Can't be too hard, right? He was telling me how he was banned as a DMOZ admin for promoting his site and a little bit about the rudimentary industry.

The project was for his Credit Card Processing website. He was a reseller for Merchant Services Inc, which was more recently bought out by HarborTouch. I had just learned about SEO the year prior at a Travel Agency I was working for, where I did some website scans to point out things to their marketing head (as I was just an Office Admin/IT/Tech Support guy for their 50 agents).

I didn't know that much about SEO at the time, and SEOBook.com was my bible as well as a few others. I knew how to implement titles, descriptions, even the keywords tag was still a thing, H1s, bolding, and other general SEO checklist items. We also added good content and more (before that was a fad). I was using tools like WebCEO and Internet Business Professional (IBP) to scan my site and give me actionable items to improve which was an easy way to get some business citations.

That was my first fully rounded SEO campaign. Guess what? I got the website to #2 or #3 on Google for "merchant services" and "merchant accounts", a niche with big competitors such as PayPal and others such as my brother's parent company, who had the exact match domain and the #1 spot for "merchant service". What a stroke of luck and hard work!

Then we wanted more. "How about we start an AdWords campaign?" my brother asked, wanting even more leads. I had a little bit of knowledge of it helping with another account, and took it on. Then he asked to duplicate the website on a different domain so we could measure the results and keep it separate from our organic results. Sure, makes sense right?

NO. WRONG. WRONG!

So I launch the new website and turn on the ads for just a few keywords that we think work, and start the campaign off slowly. A few days later.

Here was that conversation in a much more toned down, vulgarless, fashion:

Brother: "My website! It's not on the first page, I can't even find it anywhere, even 10 pages down!"

Me: "I don't know what happened."

A couple of Google searches and a few hours later, after asking around on some forums, I figured it out. Duplicate content. We got penalized by Google. I felt like I was in jail. Even after taking the duplicate website down and redirecting it, we never got those coveted rankings back.

How long did it take before I noticed it?

Only 1-2 weeks.

What did you do to prevent it from happening again, or find out quicker in the future?

Properly use Google Analytics and AdWords conversion tracking for leads to segment what is coming from organic search vs paid search traffic.

If you're going to copy an entire website, make sure it's non-indexable, by using the noindex robots directive. Should the "copy-website" receive backlinks, you can then think about canonicalizing the copy-domain to the original domain.

Merging of websites gone wrong

By axelhansson

What happened?

Agency A recently bought Agency B, both big players in SEO and SEM in general. By doing that they were boasting about becoming the biggest and best digital marketing agency.

Now Agency X (A and B combined) had some great plans on growing their website. Beforehand they asked us, the people actually working in Digital Marketing, what would be the best way to merge several websites into one.

We gave them a lot of feedback on their plans, which was basically putting everything on subdomains (bloody hell no!), and that they had to manage redirects and so on.

And.. suddenly it was released without listening to us. They just did their own thing.

Right now it's a mishmash of zero SEO, thin content and a huge mix of subdomains wreaking havoc (using different subdomains for different services). To make things worse, they not only merged the two websites but also messed up another internal migration which was included in the same release.

At my new job we look at the migration and use it as an example on "How to not do it."

This could easily have been prevented by taking the advice of its staff instead of hurrying with everything and releasing an inferior website. The worst part is them not fixing it even after shit hit the fan.

I'll leave you with this screenshot from Ahrefs showing their decline:

How long did it take you before you noticed it?

Pretty soon we saw a sharp decline in organic traffic.

What did you do to prevent it from happening again, or find out quicker in the future?

We told the stakeholders to actually listen to people that know what they're talking about.

When consolidating websites, choose subfolders over subdomains, and always make sure you have a solid website migration plan in place. Oh, and listen to the SEO experts!

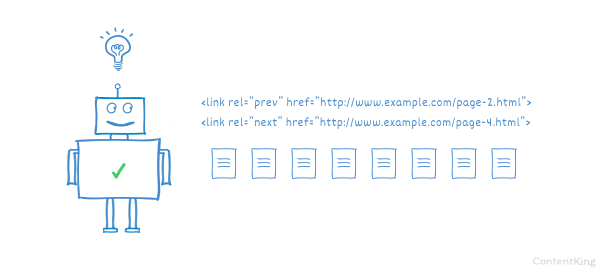

Pagination implementation gone wrong (WINNER!)

By Rankitect

What happened?

Horror stories are common in migrations: site redesigns, CMS changes, HTTPS switches etc. Most of us have experienced robots.txt & noindex directives left behind and would have loved a more automated way to monitor all these changes.

One of the recent 'disasters' I recall is when someone from the client's marketing team (with the kind contribution of the development team) decided to make a fairly large and successful website (250-300k organic monthly visits) more 'user-friendly' by adding JavaScript-driven pagination to all listing results, which is where the majority of the revenue came from.

How long did it take you before you noticed it?

It took us around 2-3 days to catch this, when we noticed the number of indexed pages dropping off a cliff; it fell to a mere 40% over the next 2 weeks. It took around 3-4 months to recover completely.

What did you do to prevent it from happening again, or find out quicker in the future?

We now use keyword positional alerts and HTML change tracking, although they're not 100% reliable.

When making changes to websites, always keep in mind that whatever you're doing, it has to work for BOTH visitors and search engines. When using JavaScript, always make sure search engines can parse it or, if not, at least use a pre-rendering solution such as prerender.io.
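A quick sanity check for pagination is to look at the raw HTML your server returns, before any script runs, and confirm it still contains ordinary links to the next pages. The Python sketch below illustrates the idea on two hypothetical snippets; the markup and the loadPage function in it are made up for the example.

```python
from html.parser import HTMLParser

class HrefCollector(HTMLParser):
    """Collects the href of every <a> element in the raw HTML."""
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

def crawlable_links(raw_html):
    """Links a crawler can follow without executing any JavaScript."""
    collector = HrefCollector()
    collector.feed(raw_html)
    return [h for h in collector.hrefs
            if not h.startswith(("#", "javascript:"))]

# Hypothetical markup before and after the 'user-friendly' change.
server_rendered = '<a href="/listings?page=2">Next</a>'
js_only = '<a href="#" onclick="loadPage(2)">Next</a>'

print(crawlable_links(server_rendered))
print(crawlable_links(js_only))
```

If the second kind of markup is all that is left on your listing pages, deeper pages are only reachable by executing the script, which is exactly the dependency you want to avoid or back up with pre-rendering.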

Learn more about JavaScript SEO.

Search and Replace frenzy gone wrong

By Joshua Jarvis

What happened?

This one isn't going to sound like SEO until you realize we switched platforms entirely for the SEO benefit.

I had a client that was on a controlled platform where all the URLs ended in .php and he wanted to migrate to WordPress. I was feeling very slick in my use of "find and replace" to take out 3rd party content and replace it with my own custom snippets. During this process I was so confident that I planned a cold email campaign to customers of this platform to encourage them to hire me to move them to WordPress.

Launch day arrives and of course one of the biggest challenges was having all these pages with .php on the end and now they needed to be redirected. I could have chosen some easier ways to do this but decided to use find and replace…

Well you can imagine what happened after I used that (and of course my client was checking it every minute because we were supposed to go live).

In any case, the entire site died because I had FOUND and REPLACED every single instance of .php that was found. In the end I had to revert it back to a version from a few weeks earlier.

How long did it take you before you noticed it?

Immediately as it happened.

What did you do to prevent it from happening again, or find out quicker in the future?

Lesson: Save, Save often, save some more.

PS: I still love "find and replace" though.

In web development, it's important to have a revision history, so you have a clear overview of the changes you're making—and so you can revert changes easily.
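Besides keeping backups, it helps to avoid editing site content at all for a job like this and to build a redirect map from an explicit list of old URLs instead. The sketch below is one hedged way to do that in Python; the legacy paths and the new trailing-slash URL structure are assumptions made for the example, not details from the submission.

```python
import re

# Only paths shaped like '/something.php' count as legacy URLs.
LEGACY_PATH = re.compile(r"^/(?P<slug>[\w\-/]+)\.php$")

def redirect_map(legacy_paths):
    """Map each legacy '/something.php' path to '/something/'.
    Paths that don't match the legacy shape are skipped, not rewritten."""
    mapping = {}
    for path in legacy_paths:
        match = LEGACY_PATH.match(path)
        if match:
            mapping[path] = "/" + match.group("slug") + "/"
    return mapping

# Hypothetical list exported from the old platform.
old_paths = ["/about.php", "/listings/homes.php", "/contact"]
for old, new in redirect_map(old_paths).items():
    print(f"301 {old} -> {new}")
```

Because the function only reads a list of old URLs and produces a mapping, nothing in the new site's files or database is touched, so a mistake in the pattern yields a wrong redirect you can correct rather than a dead site.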

Wrong use of canonicalization

By victorpan

What happened?

It was the middle of March and as the search agency of record for a brand, it was supposed to be an easy month. Budget plans were all sent out, kick-offs were all done, and we were just about to send out a refreshed keyword strategy document that, to be honest, looked like last year's but with trends and volumes updated. Enter the 40%+ organic traffic loss alert from Google Analytics (I'm probably conservative, my memory is hazy since the NDA expired).

The funny thing about agencies of record is that oftentimes your clients will hire for a more specific role (e.g. technical SEO) but you have the authority to override them. Override them we did, because some smart crass decided to canonicalize content to the category folder. But wait. Why would you help a competitor who's screwing up when you're not even scoped to fix these competitors? You wouldn't. You'd talk to your client, tell them that someone else in the org messed up and here's the $ impact, based on the PPC value of lost organic traffic, and arm them with stats on how each day this wasn't being fixed, $$$ was being lost. Maybe you'll win that tech SEO contract next year.

So what was going on? Just a quick look at organic search data a week before the traffic fell and a week after, I was able to identify that someone decided to canonicalize all content in a particular category to the category page (oh irony, how'd that agency win that tech SEO contract?).

I was so glad it was a simple fix. That traffic dip could flip right back up as if it didn't happen (and it did) and in just two days the canonical URLs were removed. But of course this happened 2-3 months AFTER everything gets reported from the top down. You know, blazing angry emails, popcorn, and CC on CC to global teams on cc and meetings and then more cc.

How long did it take you before you noticed it?

The week after it happened.

What did you do to prevent it from happening again, or find out quicker in the future?

Well, you could fire that technical agency and hire someone more competent for starters.

You could automate change detection and increase the checking frequency with a web-based crawler instead of relying on Google Analytics to look at things by week. Finally, you could quit your job and not have to deal with waiting for things to get fixed and money thrown into a fire because you'd rather be doing something tangible in your life than to butt egos back and forth with others. BigSEO, you probably did all three.

Set up monitoring for your websites with ContentKing, so you get notified right away about your key pages being canonicalized.

Search in search wreaking havoc

By ramesh_s_bisht

What happened?

We allowed our internal search pages to get indexed by Google and at the beginning, it was all smooth sailing. We were getting a steady flow of traffic from Google, but suddenly we noticed we were getting traffic from 'adult-related search terms', and this is what triggered Panda (quality issues) for our site.

We realised this and blocked search engines from crawling our internal search pages and put noindex, nofollow on all those pages where content was offensive and the other pages were canonicalized to their category pages. It was kind of a bad phase where you see your traffic going down every day and you just wait for Google to reprocess and evaluate your current status based on freshly crawled pages.

Things settled after a month: Google was able to detect the correct canonicals on these pages and we started gaining traffic from search results again, but this time it was going in the right direction, i.e. to the categories.

How long did it take you before you noticed it?

We noticed it after nearly a month, when one such page started showing up among our top landing pages: it had started ranking for several keywords and had collectively become the top traffic-fetching page from the organic channel.

What did you do to prevent it from happening again, or find out quicker in the future?

Like I said, it is best to keep an eye on your site performance and technical issues so that you can detect these problems in time. We did weekly audits for technical issues and checked the top keywords/queries and landing pages to detect any suspicious page or questionable keyword ranking.

Having indexable search results is very tricky, as it can potentially generate lots of near-duplicate content. Always make sure to apply the noindex robots directive to search-results pages.
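One way to apply that directive consistently is to set it in the response headers for anything that looks like an internal search URL. The Python sketch below shows the decision logic only; the /search path prefix and the q and s parameter names are hypothetical, so adjust them to however your own site exposes search. X-Robots-Tag is the HTTP header equivalent of the meta robots tag.

```python
from urllib.parse import urlsplit, parse_qs

SEARCH_PATH_PREFIXES = ("/search",)  # hypothetical: where search results live
SEARCH_PARAMS = {"q", "s"}           # hypothetical: search query parameters

def is_internal_search(url):
    """True when the URL looks like an internal search-results page."""
    parts = urlsplit(url)
    on_search_path = parts.path.startswith(SEARCH_PATH_PREFIXES)
    params = parse_qs(parts.query, keep_blank_values=True)
    has_search_param = bool(SEARCH_PARAMS.intersection(params))
    return on_search_path or has_search_param

def robots_headers(url):
    """Extra response headers to send for this URL."""
    if is_internal_search(url):
        return {"X-Robots-Tag": "noindex"}
    return {}

print(robots_headers("https://example.com/search?q=shoes"))
print(robots_headers("https://example.com/category/shoes"))
```

Keep in mind that a search engine has to be able to crawl a page to see its noindex directive, so blocking the same URLs in robots.txt at the same time can keep the directive from being picked up.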

Massive amounts of duplicate content due to product variants

By thedorkening

What happened?

I've been pushing for a new website since I started this job. I knew we had duplicate content issues but I didn't realize how bad it was until I needed to create 301-redirects for every product.

Each of the 4,000 products has at least 12 duplicate pages, and some even well over 20.

Now that I'm building out our new site and combining multiple products into one, I have some pages that have hundreds of incoming 301-redirects.

Our overall rankings and traffic have been steadily going down. We are supposed to launch a new site in a month from now.

How long did it take you before you noticed it?

This was an ongoing issue actually.

What did you do to prevent it from happening again, or find out quicker in the future?

Implement canonical URLs to deal with duplicate content.

If the content for your product variants is highly similar, make sure to canonicalize the variants to the main product page.
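As an illustration, if your variants only differ by query parameters, the canonical URL can be derived by stripping those parameters. The Python sketch below assumes exactly that; the color and size parameter names and the example URLs are hypothetical, and variant URLs built differently (for example, separate paths per variant) would need a lookup table instead.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

VARIANT_PARAMS = {"color", "size"}  # hypothetical variant parameters

def canonical_url(url):
    """Drop variant parameters (and any fragment), keep everything else."""
    parts = urlsplit(url)
    kept = [(k, v)
            for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k not in VARIANT_PARAMS]
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

def canonical_tag(url):
    """The <link> element to place in the <head> of the variant page."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'

print(canonical_tag("https://example.com/p/widget?color=red&size=l"))
```

Deriving the canonical from the URL in one shared function, rather than per template, also avoids the kind of one-off conditional that went wrong in the canonicalization story earlier in this article.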

Killed off income source with click fraud

By thefaith1029

What happened?

My biggest SEO mistake happened roughly 15 years ago and it's still relevant. It cut off a major income source for me as well.

I had built a Carrie Underwood fan website (hey, I was a teen) and it was getting enough traffic that I decided to sign up for Google AdSense. I signed up and started to earn a few pennies, but it wasn't enough for me at 18. So what did I do? I went ahead and started clicking on my own ads. Well, Google didn't take kindly to that. Rightfully so, banning my ass and penalizing my website.

It's been 15 years now and I still can't get a Google AdSense account. Sad Stuff.

How long did it take you before you noticed it?

Right away.

What did you do to prevent it from happening again, or find out quicker in the future?

I can't get a new Google AdSense account, but if I could I wouldn't be clicking my own ads anymore.

Play by the rules, especially if you're dependent on just one income source. Furthermore, it makes sense to diversify in terms of traffic sources and revenue sources. Don't put all your eggs in one basket.

Conclusion: minimize the risks of SEO fails

Could these SEO fails have been prevented? Most of them, yes.

However, where there are people involved, mistakes will be made. People mess up.

What matters here is that you minimize the risks of SEO fails, and that you prepare for the times when they do happen.

Here's how:

- Follow best practices: follow the industry's best practices for what you're doing. Yes, we know they can be hard to determine, because everyone has their own take on SEO, but after doing a bit of research on the topic, you will find them.

- Processes in place: make sure you have processes in place for dealing with changes and migrations. For example, prepare checklists for all the things you need to double check before and after a release.

- Ability to revert: when disaster strikes, make sure you have the ability to revert your changes, so you can quickly go back to the situation where your SEO isn't crumbling.

- Consult with an expert: always consult with an expert if you're about to make a major change. Remember, it's better to be safe than sorry!

- Tooling: make sure to have the right tooling in place. And that includes ContentKing, to monitor your website for SEO issues and changes and to send you alerts when trouble comes. Why not give it a try?