Murphy's law applies even to SEO: things will go wrong.

We've written previously about what went wrong at our agency before we founded ContentKing and the kinds of SEO fails ContentKing catches on a daily basis.

Now we'll turn to some of the brightest SEO experts for interesting SEO fails they've witnessed.

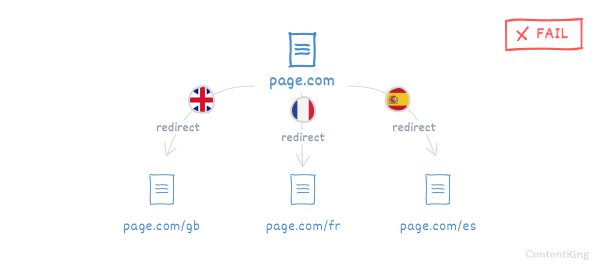

Location redirection gone wrong

Automatic IP redirects are a bad idea in almost all scenarios, but this particular one was especially catastrophic.

Barry Adams was hired by a client to bounce back from a failed website migration.

Here's what happened:

- His client moved from a country-specific domain name to a general domain name

- At the same time, they launched separate international sections of their site

So they went from domain.nl to domain.com/nl while also launching domain.com/de, domain.com/gb, and a few more.

They implemented hreflang tags, and redirects from the .nl to the .com were also in place. They thought they'd done all the necessary preparation and were good to go. They launched the new site, and their traffic just plummeted. It was a total disaster.

The issue was a relatively simple, but far too frequent one: they had an automatic redirect in place based on each visitor's IP address, where the visitor was immediately sent to the correct country version of the site.

A visitor from the Netherlands would be sent to domain.com/nl; a visitor from the United Kingdom would be sent to domain.com/gb, and so on.

This IP-based redirect was present on every single page of the site. If you were on a page on domain.com/nl, and you tried to go to domain.com/gb, you'd be redirected straight back to domain.com/nl.

Google crawls primarily from IP addresses in the US. The client's website didn't have a section for the US, so instead all traffic from the US was redirected to a landing page explaining that their service was not available in the US. So that page was the only page that Google ever saw. Stop for a second, and let that sink in.

All the other pages on domain.com were basically invisible for Google.
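To see why the crawler only ever saw one page, the redirect logic can be modeled in a few lines. This is a minimal sketch, not the client's actual implementation: the country table, the `/not-available` path, and the function names `resolve` and `pages_seen` are all assumptions made for illustration.

```python
# Hypothetical model of the redirect logic described above. The paths and
# country codes are illustrative, not the client's real configuration.
COUNTRY_SECTIONS = {"NL": "/nl", "DE": "/de", "GB": "/gb"}
FALLBACK_PAGE = "/not-available"  # assumed path of the "not available" page


def resolve(path: str, visitor_country: str) -> str:
    """Return the path a visitor ends up on after the IP-based redirect."""
    section = COUNTRY_SECTIONS.get(visitor_country)
    if section is None:
        # No section for this country: every request lands on the fallback.
        return FALLBACK_PAGE
    if path == section or path.startswith(section + "/"):
        return path  # already inside the visitor's own country section
    return section  # any other request is bounced back to that section


def pages_seen(paths: list[str], visitor_country: str) -> set[str]:
    """Collect the distinct pages reachable for a visitor from one country."""
    return {resolve(p, visitor_country) for p in paths}


site = ["/nl", "/nl/pricing", "/de", "/gb", "/gb/pricing"]
print(pages_seen(site, "US"))  # {'/not-available'}
print(pages_seen(site, "NL"))  # only paths under /nl
```

A crawler coming from a US IP address collapses the whole site into a single page, which matches what happened here.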

I'd avoid redirects by IP location in most cases; it's easy to break indexing & frustrate users.

John ☆.o(≧▽≦)o.☆ (@JohnMu), June 20, 2017

Keep in mind that redirects by IP location are generally not the best way to solve a problem. If you do implement them, be very careful, and make sure you run everything by an SEO expert first.

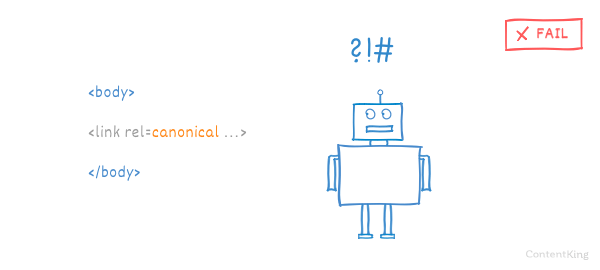

Canonicals in body instead of header

Due to an issue with a script, the canonical URL was thrown into the body-section instead of the head-section. You could only find out if you used Chrome Developer Tools to view the DOM tree.

Patrick Stox is known for his love of quirky SEO fails. And boy, is this example quirky and easy to overlook! It's not from a client of his, but it's an example he analyzed in detail: because of an error, Home Depot's canonical URL was placed in the body-section instead of the head-section.

Here's what happened: if you pull up the source code of the page, you'll see that the canonical URL looks fine.

However, if you use "Inspect" to view the DOM tree in Chrome Developer Tools, you'll find that the head-section of Home Depot's website breaks early, and the canonical tag is accidentally placed into the body-section due to a JavaScript error. Google ignores canonical URLs that are defined in the body, and in this case Google just thought that no canonical URL was deployed.

So, what's the worst that could happen if all of your canonical tags are ignored?

You won't have control over indexing of your preferred version or consolidation of signals.

Many pages will be indexed with the wrong URL, or you may have multiple versions of the same page indexed without consolidating its signals, and no version of that page will rank as high as it should. Read more on this in The Ultimate Guide to Duplicate Content.

Interestingly, the canonical tags seemed fine on Home Depot's mobile website. It's likely that one of the scripts they were calling on the desktop version of the site was causing the issue, but that problem would have resolved itself with the mobile-first index.

If Home Depot wanted to fix this quickly, they could probably get away with moving the canonical tag up within the head-section so that it's above all the scripts, or they could figure out what's causing the head-section to close early (likely a tag that wasn't closed properly).

Note that Home Depot has fixed the issue since Patrick Stox wrote about it.

(This SEO fail and others are covered on Search Engine Land.)

Keep a watchful eye on whether there are scripts that hurt the rendering of your pages. Look beyond the source code; look at what search engines see.
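One way to check this is to look at where the canonical tag sits in the rendered DOM rather than in the raw source. The sketch below uses Python's standard `html.parser` and assumes its input is the serialized rendered DOM (for example, the outer HTML copied from the browser's developer tools), because this parser does not reproduce how a browser closes the head early. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalLocator(HTMLParser):
    """Record which section (head or body) each canonical link appears in."""

    def __init__(self) -> None:
        super().__init__()
        self.section = None  # "head", "body", or None when in neither
        self.found = []      # list of (section, href) tuples

    def handle_starttag(self, tag, attrs):
        if tag in ("head", "body"):
            self.section = tag
        elif tag == "link":
            attributes = dict(attrs)
            rel = (attributes.get("rel") or "").lower().split()
            if "canonical" in rel:
                self.found.append((self.section, attributes.get("href")))

    def handle_endtag(self, tag):
        if tag == "head":
            self.section = None  # the head has closed


def canonical_sections(rendered_html: str):
    """Return (section, href) for every canonical link in the markup."""
    parser = CanonicalLocator()
    parser.feed(rendered_html)
    return parser.found


rendered = (
    "<html><head><title>Example</title></head>"
    "<body><link rel='canonical' href='https://www.example.com/page'>"
    "<p>Content</p></body></html>"
)
print(canonical_sections(rendered))
# [('body', 'https://www.example.com/page')]
```

Any result whose section is not `head` is a canonical that search engines are likely to ignore.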

Agency site launched without consulting SEO expert

I got it written into company law that nothing went live unless it went through a process of being checked by the optimisation team.

A long time ago, I used to be Head of SEO at an agency. My main focus was client work, and one day I got an all-staff email saying "Good news, our new site is now live!". While I had been involved in early conversations about the need for a new website, I hadn't heard it was actually being built and was going to be launched on this day.

I took a quick peek, and…my suspicions were confirmed: it wasn't exactly search engine friendly. In fact, it was a complete mess. I dropped a quick email to the person who had sent the email.

Me:

"Has anyone taken a look at the SEO of this site before it went live?"

His response:

"Oh don't worry, the developer says he knows SEO".

Now, don't get me wrong, there is an increasing number of developers who are experts in SEO, but this wasn't one of them. The biggest issues: all the title tags were the same, and content was often inaccessible for various reasons. Back then, Google wasn't able to crawl JavaScript the way it does today; in fact, it was pretty notorious for making a mess of it. The internal links, written predominantly in JavaScript, only created an intricate spider trap. Fortunately, the developer (like most developers) was happy to listen and sit down with me. It took a couple of hours to resolve, but I got it written into company law that nothing went live unless it went through a process of being checked by the optimisation team.

Sometimes a small disaster is good, because it can stop a massive one later and allows you to really get the right processes in place!

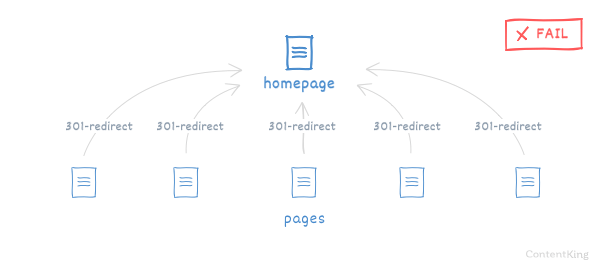

Let's redirect all URLs to the homepage

Within one week, those clever developers/SEOs completely destroyed the technical SEO of the royal family.

While Omi Sido wasn't involved in this migration, he analyzed it in detail and spoke about it at BrightonSEO in April 2017.

Here's what happened: the British royal website, previously running on royal.gov.uk, was migrated to a new domain name: royal.uk.

The SEO involved managed to 301-redirect all URLs on the old domain to the homepage of the new domain. Yes, that's thousands of URLs being redirected to the homepage.

Within one week, this resulted in a traffic loss of 80%, as lots of pages that previously ranked well lost their authority. All links from other websites that were pointing to the old URLs were now pointing to the new domain's homepage due to the 301-redirects.

In order for a website migration to go seamlessly, you need to prepare well and have a structured, well thought out approach. Part of that is a good URL mapping that describes what new URLs the old URLs should be redirected to.

Always make sure to neatly map your old URLs to new URLs, to make sure the migration is processed swiftly and correctly.
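A redirect map can be sanity-checked before launch. The following is a minimal sketch that assumes the mapping is available as a plain dictionary of old URL to new URL. The function names, the `limit` parameter, and the `.example` URLs are hypothetical and only serve as illustration.

```python
from collections import Counter


def overloaded_targets(redirect_map: dict[str, str], limit: int = 1) -> dict[str, int]:
    """Return new URLs that receive more than `limit` old URLs."""
    counts = Counter(redirect_map.values())
    return {target: n for target, n in counts.items() if n > limit}


def unmapped(old_urls: list[str], redirect_map: dict[str, str]) -> list[str]:
    """Return old URLs that have no entry in the redirect map."""
    return [url for url in old_urls if url not in redirect_map]


redirect_map = {
    "https://old.example/": "https://new.example/",
    "https://old.example/about": "https://new.example/",
    "https://old.example/history": "https://new.example/",
    "https://old.example/contact": "https://new.example/contact",
}
old_urls = list(redirect_map) + ["https://old.example/events"]

print(overloaded_targets(redirect_map))  # {'https://new.example/': 3}
print(unmapped(old_urls, redirect_map))  # ['https://old.example/events']
```

A homepage that shows up as the target for a large share of old URLs is the pattern behind the fail described in this section.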

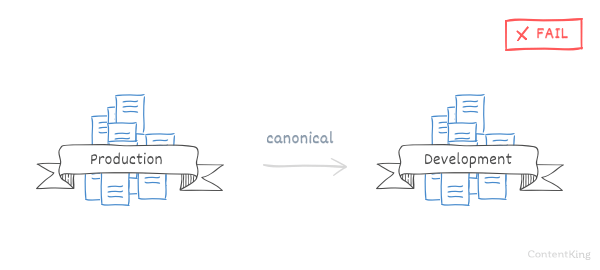

Canonicalization to development environment

Turns out the template used in production was set to default every page to a self-referencing canonical to the development environment – which is only accessible at the corporate HQ or through VPN.

Jenny Halasz has reported seeing a huge canonicalization issue at a large enterprise retail prospect she's in touch with.

Weeks ago, they launched two dozen new pages in a particular product category. They couldn't figure out why none of the newly published pages were being indexed, so they turned to her for help.

It turns out the page template used in production contained a static canonical URL pointing to the development environment on every page that wasn't indexed.

When the development team copied the template from the development environment to the production environment, they copied all files and kept the static canonical URL reference to the development environment in the template.

This meant that not only were all the pages canonicalized to a site that's invisible to the outside world (the development environment is only accessible via the company's internal network), but the pages weren't included in the sitemap either. This in turn meant that every new page—including a page containing a press release!—was canonicalized to the development environment.

Always double-check post-release whether your changes are correct. Make sure to have monitoring in place that picks up and evaluates your changes on the fly, giving you instant feedback.
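A post-release check for this specific mistake can be very small. The sketch below compares the host of a page with the host of its canonical URL, using only the standard library. The function name and the example hosts are assumptions, and a real check would also need to fetch each page and extract its canonical tag.

```python
from urllib.parse import urljoin, urlsplit


def canonical_host_mismatch(page_url: str, canonical_href: str) -> bool:
    """True when the canonical URL points at a different host than the page."""
    resolved = urljoin(page_url, canonical_href)  # resolves relative hrefs
    return urlsplit(resolved).hostname != urlsplit(page_url).hostname


page = "https://www.example.com/products/new"
print(canonical_host_mismatch(page, "https://dev.example.com/products/new"))  # True
print(canonical_host_mismatch(page, "/products/new"))                         # False
```

Cross-domain canonicals are sometimes intentional, so a mismatch is a prompt for review rather than proof of an error.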

Throwing the baby out with the bathwater using robots.txt

As this SEO fail is a particularly juicy one, our contributor has specifically asked not to be named.

Here's what happened: a website migration was done, with the homepage of the new website being available via www.website.com/home. The website owner didn't want the old homepage located at / to be crawled by Google, so they set up the following robots.txt:

User-agent: Googlebot

Disallow: /

Because robots.txt rules match URL paths by prefix and every path starts with /, this directive blocks Googlebot from accessing the old homepage, plus the rest of the website.

It took them quite a while to figure out what the issue was, because they thought they had merely made the old homepage inaccessible. This SEO fail made their organic traffic plummet.
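The effect of that rule can be reproduced with Python's standard `urllib.robotparser`. This is only an approximation, since it is not Googlebot's own parser, but it applies the same prefix matching to `Disallow` values. The two URLs are the ones from the example above.

```python
from urllib.robotparser import RobotFileParser

# The rules from the robots.txt described above.
rules = [
    "User-agent: Googlebot",
    "Disallow: /",
]

parser = RobotFileParser()
parser.parse(rules)

for url in ("https://www.website.com/", "https://www.website.com/home"):
    # can_fetch returns False when the URL is blocked for that user agent.
    print(url, parser.can_fetch("Googlebot", url))
```

Both URLs come back as blocked, including the new homepage at /home that the owner wanted crawled.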

After a migration, search engine crawlers should keep full access to the old domain so that they can learn about the migration and can attribute link authority to the new URLs.

Blocking crawlers from accessing old domains using robots.txt

When migrating from one domain to another do not block crawlers from accessing the old domain. It delays and hurts the migration process.

This isn't just one example; unfortunately Patrick Stox has seen this happen many times.

Here's what happens: when people are migrating from an old to a new domain, they often block crawlers from accessing the old domain using their robots.txt file.

They think that this helps get the old pages out of the index quickly, but instead they're delaying and interfering with the migration process.

Why?

Because search engine crawlers:

- aren't able to follow redirected URLs on the old domain, because they're not accessible anymore due to the robots.txt file. This means that these crawlers can't feed the old URLs' link authority to your new pages.

- won't explore the new domain quickly, because they essentially treat it as a brand-new domain.

Keep in mind that if you make parts of a website inaccessible to search engine crawlers, they won't be able to process those parts' contents. They won't know what's there if they're not allowed to see it.

PageRank sculpting anno 2018

I was expecting 100% of their pages to be dropped from the Google index.

One of Jenny's clients went rogue and decided to implement an SEO tactic named "PageRank sculpting" that hasn't been effective for over a decade.

Here's what happened: the client wanted to control the distribution of link authority within their website, and put the nofollow attribute on every link except the homepage.

Very quickly, this resulted in 87% of their pages being dropped from Google's index. Nofollow doesn't necessarily mean pages will be dropped from the index, but if the only quality links pointing to a page are from internal sources (and you nofollow them), Google generally doesn't think enough of that page to keep it in the index. Jenny expected to see 100% dropped for this reason, but a few of them were good enough to remain on their own. Fortunately, removing the nofollow attributes also resulted in a quick restoration of the pages.

Be careful about the nofollow attribute—it's a powerful tool that should only be used on internal links in very specific cases such as a faceted navigation.
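A quick audit can show how many internal links on a page carry nofollow. The sketch below is a rough illustration using the standard library. It treats a link as internal when its host matches the page's host, which is a simplifying assumption, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class InternalLinkAudit(HTMLParser):
    """Count internal links and how many of them are nofollowed."""

    def __init__(self, page_url: str) -> None:
        super().__init__()
        self.page_url = page_url
        self.host = urlsplit(page_url).hostname
        self.internal = 0
        self.nofollowed = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        if urlsplit(urljoin(self.page_url, href)).hostname != self.host:
            return  # external link, not part of this audit
        self.internal += 1
        if "nofollow" in (attributes.get("rel") or "").lower().split():
            self.nofollowed += 1


def nofollow_share(page_url: str, html: str) -> float:
    """Return the fraction of internal links that carry rel=nofollow."""
    audit = InternalLinkAudit(page_url)
    audit.feed(html)
    return audit.nofollowed / audit.internal if audit.internal else 0.0


sample = (
    '<a href="/">Home</a>'
    '<a rel="nofollow" href="/a">A</a>'
    '<a rel="nofollow" href="/b">B</a>'
    '<a rel="nofollow" href="https://www.example.com/c">C</a>'
    '<a href="https://other.example/">External</a>'
)
print(nofollow_share("https://www.example.com/", sample))  # 0.75
```

A share close to 1.0 across a whole site would be a strong hint that someone has attempted the kind of sculpting described above.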

Preventing SEO fails

It's a pity these SEO fails happen, because they don't have to. In most cases, they can be prevented. We all think: "This isn't going to happen to me." And then it does happen, because of Murphy's Law. You need to do what you can to avoid SEO fails like these, and prepare for when disaster strikes. Here's how:

- Follow best practices: follow the industry best practices for what you're doing. Yes, we know they can be hard to determine, because everyone has their own take on SEO, but after doing a bit of research on the topic, you will find them.

- Processes: make sure you have processes in place for dealing with changes and migrations. For example, prepare checklists for all the things you need to double check before and after a release.

- Ability to revert: when disaster strikes, make sure you have the ability to revert your changes, so you can quickly restore the situation where your SEO is not crumbling.

- Consulting with an expert: always consult with an expert if you're about to make a major change. Remember, it's better to be safe than sorry!

- Tooling: make sure to have the right tooling in place. That means ContentKing to monitor your website for SEO issues and changes and to get alerted when trouble comes. Why not give it a try?