On July 1st, Google announced that they were going to revamp the Robots Exclusion Protocol – 25 years after its birth.

This resulted in an extension to the Robots Exclusion Protocol that was submitted to the Internet Engineering Task Force (IETF) on July 1st, 2019.

A robots.txt update was long overdue

It's an interesting development, one which was long overdue in my opinion. The internet has come such a long way since the REP was first created by Martijn Koster all those 25 years ago.

I feel we have been lacking an updated standard for crawlers. One that can be applied to the modern web, but above all – one that decreases ambiguity on how crawlers need to deal with certain situations and how website owners can best communicate their crawling preferences to crawlers.

While the draft partly confirms things we already knew, such as the maximum file size of 500 kibibytes, it also contains some really interesting gems that seem to have escaped many SEOs' attention, judging from the conversations on social media.

What's clear though: the robots.txt is your crawl budget's Achilles' heel. Read on to learn why.

I'm happy to see the Google team respond to requests from the community (no "but" here). The timing seems right, because some sites still use non-standard entries in their robots.txt, and it's one of the most important and powerful files for SEOs.

I strongly hope this momentum of transparency and responsiveness continues in other areas as well. The more transparent Google is, the better the web becomes.

The most important takeaways

While the news about this RFC itself has been covered already, nobody's done a deep dive yet and analyzed what this may mean for SEO. So here we go!

Allow and disallow directives

Google's way of interpreting allow and disallow directives is proposed as the default standard: the most specific matching rule must be used, meaning the rule with the longest matching path.

Example

```
User-agent: *
Disallow: /foo/
Allow: /foo/bar/
```

The result: /foo/ is inaccessible, and so are all resources in this subfolder, except for /foo/bar/ and everything inside it.
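To make the longest-match rule concrete, here's a minimal Python sketch that evaluates the example rules. It assumes plain prefix matching only (the `*` and `$` wildcards and empty `Disallow:` values are not handled), measures specificity by the length of the rule's path, and lets `allow` win a tie, as the final version of the standard (RFC 9309) specifies. `RULES` and `is_allowed` are hypothetical names used for illustration.

```python
# Rules from the example above as (directive, path) pairs.
# Hypothetical representation; real parsers also track user-agent groups.
RULES = [("disallow", "/foo/"), ("allow", "/foo/bar/")]


def is_allowed(path: str, rules=RULES) -> bool:
    """Return True if `path` may be crawled under longest-match semantics.

    Simplified sketch: plain prefix matching only, no `*`/`$` wildcards.
    """
    best_len = -1
    allowed = True  # if no rule matches, the path may be crawled
    for directive, rule_path in rules:
        if not path.startswith(rule_path):
            continue
        length = len(rule_path)
        # A longer (more specific) match wins; on a tie, allow wins.
        if length > best_len or (length == best_len and directive == "allow"):
            best_len = length
            allowed = directive == "allow"
    return allowed


for p in ["/foo/", "/foo/page.html", "/foo/bar/", "/foo/bar/page.html", "/about/"]:
    print(p, is_allowed(p))
```

Running it prints `False` for /foo/ and /foo/page.html, and `True` for the other three paths: /foo/bar/ matches both rules, but the `Allow` path is longer, so it wins.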

This is well documented, and Bing currently already interprets robots.txt this way, but it's interesting to see that Google's pushing for other search engines to adopt it as well.

Redirecting robots.txt

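Crawlers should follow at least 5 consecutive redirects (hops), and the rules of the robots.txt they end up at must be applied in the context of the initial authority, i.e. the host whose robots.txt was originally requested.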

Example

- https://example1.com/robots.txt 301 redirects to https://example2.com/robots.txt
- https://example2.com/robots.txt 301 redirects to https://example3.com/robots.txt
- https://example3.com/robots.txt 301 redirects to https://example4.com/robots.txt
- https://example4.com/robots.txt 301 redirects to https://example5.com/robots.txt
- https://example5.com/robots.txt 301 redirects to https://example6.com/robots.txt

This results in https://example6.com/robots.txt's rules being applied to https://example1.com.

Furthermore, if more than 5 hops are needed, crawlers may assume the robots.txt is unavailable (see the next section).
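The redirect rule can be sketched in Python as follows. To keep it runnable offline, a hypothetical `REDIRECTS` dictionary stands in for real HTTP 301 responses, and `resolve_robots_url` is an illustrative name. The sketch stops after exactly five hops, the minimum the draft asks crawlers to follow; a real crawler is free to follow more.

```python
from typing import Dict, Optional

MAX_HOPS = 5  # the draft: follow at least 5 consecutive redirects

# Hypothetical stand-in for live HTTP 301 responses, mirroring the example:
# example1 -> example2 -> ... -> example6
REDIRECTS: Dict[str, str] = {
    f"https://example{i}.com/robots.txt": f"https://example{i + 1}.com/robots.txt"
    for i in range(1, 6)
}


def resolve_robots_url(start: str, redirects: Dict[str, str],
                       max_hops: int = MAX_HOPS) -> Optional[str]:
    """Follow redirects from `start`.

    Returns the final robots.txt URL, whose rules then apply to the
    *initial* host, or None if more than `max_hops` redirects would be
    needed (the crawler may then treat robots.txt as unavailable).
    """
    url, hops = start, 0
    while url in redirects:
        if hops == max_hops:
            return None  # too many hops: treat as unavailable
        url = redirects[url]
        hops += 1
    return url


print(resolve_robots_url("https://example1.com/robots.txt", REDIRECTS))
```

This prints https://example6.com/robots.txt. Adding a sixth redirect makes the function return `None`, and the hop cap also stops redirect loops from running forever.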

Unavailability status (4xx)

In cases where a robots.txt is unavailable and returns a 4xx error, crawlers may access any URI or choose to use a cached version of that robots.txt for up to 24 hours.

This means that after those 24 hours, crawlers are allowed to access all the resources on your site!
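The 4xx behavior boils down to a small decision, sketched below in Python. It models a crawler that chooses to keep using its cached copy while that copy is at most 24 hours old (the draft also allows skipping the cache entirely); `rules_on_4xx` and the `(directive, path)` rule format are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Rule = Tuple[str, str]  # (directive, path), e.g. ("disallow", "/private/")
CACHE_LIMIT = timedelta(hours=24)


def rules_on_4xx(cached_rules: Optional[List[Rule]],
                 cached_at: Optional[datetime],
                 now: datetime) -> List[Rule]:
    """Rules a crawler applies when robots.txt returns a 4xx status.

    Assumes the crawler prefers its cached copy while it is at most
    24 hours old; otherwise it falls back to no rules at all.
    """
    if cached_rules is not None and cached_at is not None:
        if now - cached_at <= CACHE_LIMIT:
            return cached_rules
    return []  # empty rule set: every URL may be crawled


cached = [("disallow", "/low-value/")]
print(rules_on_4xx(cached, datetime(2019, 7, 1, 9), datetime(2019, 7, 1, 21)))
print(rules_on_4xx(cached, datetime(2019, 7, 1, 9), datetime(2019, 7, 2, 21)))
```

With a 12-hour-old cache, the `Disallow: /low-value/` rule still applies; once the cached copy is 36 hours old, the function returns an empty list and nothing is off-limits anymore.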

Why leave yourself vulnerable to SEO disasters? Start monitoring your robots.txt today.

Example

You've set up disallow rules to prevent search engines from accessing low-value content that has incoming links, and now for some reason your robots.txt has started returning the 404 status code without your noticing.

This results in that low-value content getting crawled, and potentially getting indexed too.

Unreachable status (5xx, timeouts and connection errors)

A direct quote from the RFC:

"If the robots.txt is unreachable due to server or network errors, this means the robots.txt is undefined and the crawler MUST assume complete disallow."

It's not that black and white though. There are a few different scenarios to consider:

Up until 30 days: no crawling

Crawlers must stop crawling and assume a complete disallow (Disallow: /). As long as your robots.txt remains unreachable, they will not crawl your site, for up to 30 days.

After 30 days with a cached robots.txt copy available: resume crawling with previous rules

After your robots.txt has been unreachable for a reasonably long period of time (for example, 30 days), crawlers may continue to use a cached copy of your robots.txt.

After 30 days without a cached robots.txt copy available: resume crawling without any restrictions

If there's no cached copy available, crawlers may treat your robots.txt as unavailable (just like a 4xx) and assume there are no crawl restrictions.
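The three scenarios above can be condensed into one function. This is a sketch under stated assumptions: `days_unreachable` counts full days since the first failed fetch, the 30-day limit is the draft's example of a "reasonably long period", and `rules_on_5xx` is a hypothetical name.

```python
from typing import List, Optional, Tuple

Rule = Tuple[str, str]  # (directive, path)
DISALLOW_ALL: List[Rule] = [("disallow", "/")]
UNREACHABLE_LIMIT_DAYS = 30  # the draft's example of a "reasonably long period"


def rules_on_5xx(days_unreachable: int,
                 cached_rules: Optional[List[Rule]]) -> List[Rule]:
    """Rules a crawler applies while robots.txt is unreachable (5xx, timeouts).

    `days_unreachable` counts full days since the first failed fetch.
    """
    if days_unreachable < UNREACHABLE_LIMIT_DAYS:
        return DISALLOW_ALL  # up until 30 days: complete disallow
    if cached_rules is not None:
        return cached_rules  # after 30 days: resume with cached rules
    return []  # after 30 days, no cache: no restrictions
```

For example, `rules_on_5xx(10, cached)` still blocks everything, while `rules_on_5xx(45, None)` returns an empty rule set.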

Examples

You've been running your website for a few years now, driving thousands of visitors a month. All your pages work fine, but your robots.txt consistently returns 5xx errors.

This results in all crawling activities being paused for 30 days. New content and updated content won't be crawled during this time. Unless you're monitoring your robots.txt, it won't be easy to learn why crawling is paused and your crawl budget has been reduced to zero.

After those 30 days, crawling will be resumed using the rules in the cached version of the robots.txt (if available).

Now another example:

Your site's robots.txt has been returning 5xx errors for 28 days, and suddenly it returns a 200 OK. Upon the next request, it returns a 5xx error again. The 200 OK response that was received in between the 5xx responses "resets the timer", meaning that in theory, you could see paused crawling for 58 days (28 plus 30 days).
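The "timer reset" arithmetic can be checked with a small simulation. It's a sketch under simplifying assumptions: one robots.txt fetch per day, the 30-day limit from the scenarios above, and any non-5xx response resetting the unreachable streak. `paused_days` is a hypothetical helper.

```python
def paused_days(daily_statuses, limit=30):
    """Count days on which a crawler assumes a complete disallow.

    Assumes one robots.txt fetch per day: a 5xx response extends the
    current unreachable streak, any other status resets it to zero.
    """
    streak = 0
    paused = 0
    for status in daily_statuses:
        if 500 <= status <= 599:
            streak += 1
            if streak <= limit:  # still within the 30-day window
                paused += 1
        else:
            streak = 0  # a successful fetch resets the timer
    return paused


print(paused_days([503] * 28 + [200] + [503] * 40))  # 58
print(paused_days([503] * 69))  # 30
```

The single 200 OK on day 29 restarts the 30-day window, so 28 + 30 = 58 days are spent paused, versus 30 days for an uninterrupted outage of the same length.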

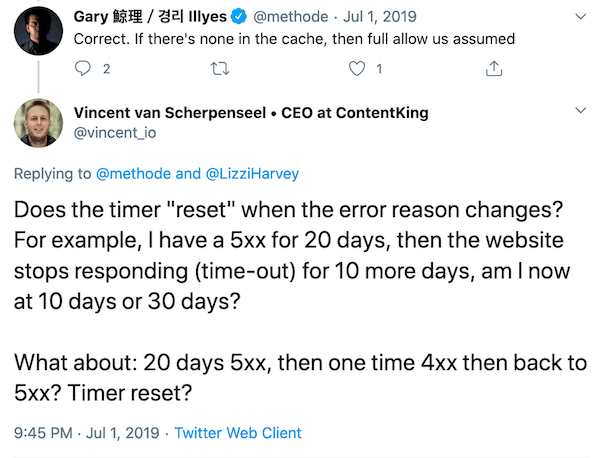

This tweet describes this:

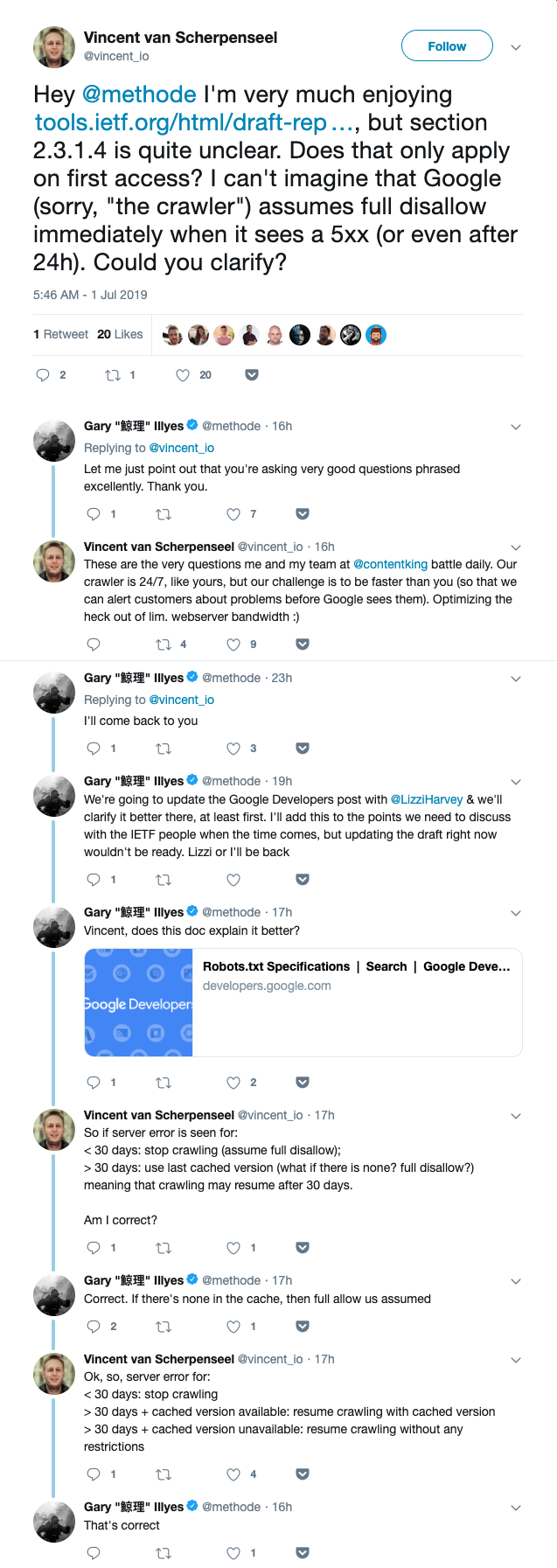

Feedback on the RFC

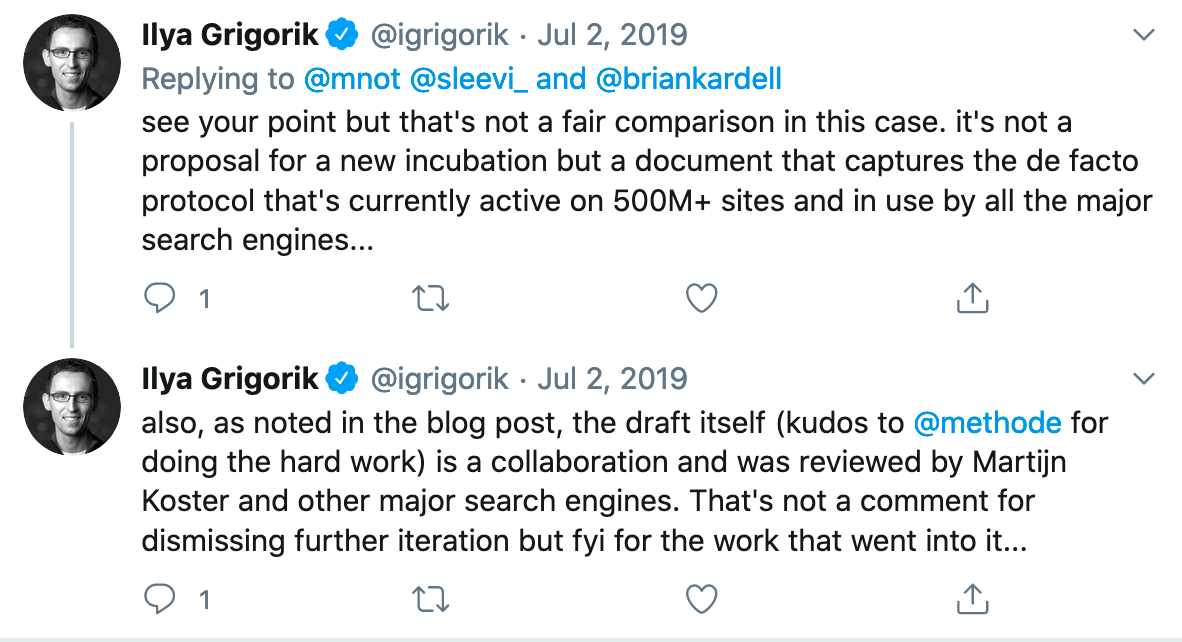

Google specifically asked for feedback from people who care about this topic, so my colleague Vincent van Scherpenseel joined that discussion and had an insightful exchange of tweets with Gary Illyes (and even got Gary and Lizzy Harvey to update Google's robots.txt documentation (opens in a new tab)):

If you have any feedback of your own, definitely do let the authors know.

Support from other major search engines?

In this tweet, Ilya Grigorik mentions that the draft is being reviewed by "other major search engines" as well. This sounds good in theory, because search engines' interpretations of robots.txt vary.