Log File Analysis for SEO: An Introduction

Log file analysis plays an important role in SEO because log files show search engine crawlers’ true behavior on your site.

In this article, we’ll describe what log file analysis is, why it’s important, how to read log files, where to find them, and how to get them ready for analysis. We’ll also run through the most common use cases!

What is log file analysis in SEO?

Through log file analysis, SEOs aim to get a better understanding of what search engines are actually doing on their websites, in order to improve their SEO performance.

Analyzing your log files is like analyzing Google Analytics data – if you don’t know what you’re looking at and what to look for, you’re going to waste a lot of time without learning anything. You need to have a goal in mind.

So before you dive into your log files, make a list of questions and hypotheses you want to answer or validate. For example:

- Are search engines spending their resources crawling your most important pages, or are they wasting your precious crawl budget on useless URLs?

- How long does it take Google to crawl your new product category containing 1,000 new products?

- Are search engines crawling URLs that aren’t part of your site structure (“orphaned pages”)?

Why is log file analysis important in SEO?

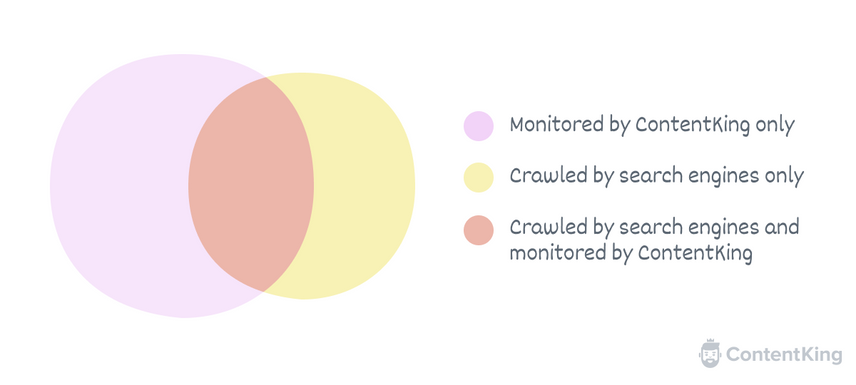

Since only log files show crawlers’ true behavior, these files are essential to understanding how they crawl your site. We often see this being the case:

Legacy crawlers, or even a monitoring platform like ContentKing, only simulate what search engines see; they do not provide a true reflection of how search engines crawl. And to be clear, Google Search Console doesn’t tell you how they crawl either.

Using log file analysis, you can for example uncover important issues such as:

- Unfortunate search engine crawl priorities: your logs will show you what pages (and sections) get crawled most frequently. And – especially with large sites – you’ll often see that search engines are spending a lot of time crawling pages that carry little to no value. You can then take action and adjust things like your robots.txt file, internal link structure, and faceted navigation.

- 5xx errors: your log files help to identify 5xx error response codes, which you can then use as a starting point for follow-up investigations.

- Orphaned pages: orphaned pages are pages that live outside of your site structure – they have no internal links from other pages. Because of this, most crawl simulations will not be able to discover these pages, so they’re easy to forget about. If they’re getting crawled by search engines, your log files will reflect this. And boy do search engines have a good memory – they rarely “forget” about URLs. You can then take action: for example include the orphaned pages in the site structure, redirect them, or remove them entirely.

And that's not all: further down in this article we'll describe the most common log file analysis use cases in great detail. Now, let's carry on!

What is a log file?

A log file is a text file containing records of all the requests a server has received, from both humans and crawlers, and its responses to the requesters.

Throughout this article, when we talk about “the request”, we’re referring to the request a client makes to a server. The response the server sends back is what we’ll refer to as “the response”.

The kinds of requests that are logged

A log file lists requested pages (including those with parameters) but also includes assets such as custom fonts, images, JavaScript files, CSS files, and PDFs. You’ll also find requests for pages that are long gone – or have never existed. Literally every request is logged.

How requesting works

Before we continue, we need to talk about how these requests work.

When your browser wants to access a web page on a server, it sends a request. Among other things, this request consists of these elements:

- HTTP Method: for example GET.

- URL Path: the path to the resource that’s requested, for example “/” for the homepage.

- HTTP Protocol version: for example HTTP/1.1 or HTTP/2.

- HTTP Headers: for example the user-agent string, preferred languages, and the referring URL.

Next, the server sends back a response. This response consists of three elements:

- HTTP Status Code: the three-digit response to the client’s request.

- HTTP Headers: headers containing for example the content-type that was returned and instructions on how long the client should cache the response.

- HTTP Body: the body (e.g. HTML, CSS, etc.) is used to render and display the page in your browser. The body payload may not always be included – for example when a server returns a 301 status code.
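To make the request elements above concrete, here is a minimal Python sketch that splits a hand-written HTTP/1.1 request into its method, path, protocol version, and headers. The request text, the host name www.example.com, and the function name parse_request are illustrative assumptions, and the sketch only covers the text-based HTTP/1.x format (HTTP/2 is binary on the wire).

```python
# A hand-written HTTP/1.1 request for the homepage (illustrative, not captured traffic).
RAW_REQUEST = (
    "GET / HTTP/1.1\r\n"
    "Host: www.example.com\r\n"
    "User-Agent: Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)\r\n"
    "Accept-Language: en-US\r\n"
    "\r\n"
)


def parse_request(raw: str) -> dict:
    """Split a raw HTTP/1.x request into method, path, version and headers."""
    # Everything before the first blank line is the request line plus headers.
    head, _, _body = raw.partition("\r\n\r\n")
    request_line, *header_lines = head.split("\r\n")
    method, path, version = request_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return {"method": method, "path": path, "version": version, "headers": headers}


request = parse_request(RAW_REQUEST)
print(request["method"], request["path"], request["version"])
print(request["headers"]["user-agent"])
```

The method, path, and protocol version are the same three values that end up in the quoted request field of an access log record.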

Access logs

When logging is active on a web server, all the requests it receives are logged in a so-called access log file. These records typically contain information about each request received, such as the HTTP Status Code that the server returned and the size of the requested file. These access log files are typically saved in standardized text file formats, such as Common Log Format or Combined Log Format.

These access logs come straight from the source – the web server that received the request. Gathering logs becomes trickier if you’re running a large website with a complex setup that uses for example:

- Load balancers

- Separate servers to serve assets (e.g. assets.conductor.com)

- A Content Delivery Network (CDN)

In practice, you’ll find that you need to pull logs from different places and combine them to get a complete picture of all of the requests that were made. You may also need to reformat some of the log files to make sure they’re in the same format.

CDN logs

Because of their decentralized nature and massive scale, it’s no small feat for CDNs to provide access to the access logs across all of their machines. However, some of the bigger CDN providers offer solutions:

- Cloudflare offers Cloudflare Logs, as part of their enterprise plan.

- Akamai offers Log Delivery Service, as part of their DataStream product.

- Amazon CloudFront offers standard logging, as part of their standard platform.

If you’re not on Cloudflare’s enterprise plan, but you do want to gain access to log files, you can generate them on the fly using Cloudflare Workers. Cloudflare Workers are scripts that run on the CDN’s edge server (a server on the "edge of the CDN": the data center where the CDN connects with the internet, typically closest to the visitor), allowing you to intercept requests destined for your server. You can modify these requests, redirect them, or even respond directly.

Moving forward we’ll refer to the general concept of running scripts on a CDN’s edge as “edge workers”.

The possibilities for edge workers are endless. Besides generating log files on the fly, here are a few abilities that will help to illustrate their power:

- Adjust your robots.txt

- Implement redirects

- Implement X-Robots-Tag headers

- Change titles and meta descriptions

- Implement Schema markup

And the list goes on. It’s important to note though that using edge workers adds a lot of complexity, as they’re yet another place where something can go wrong. Read more about mitigating these risks.

If you’re using the Cloudflare CDN, you can use their Cloudflare Workers to feed your logs to ContentKing. You can analyze your log files in real time directly in ContentKing, so you can see when your site is visited by search engines and how often.

A quick note on terminology

Moving forward, when we use the term “logs”, we may be referring to any of the following:

- Traditional access logs on Apache/nginx

- CDN access logs

- Logs constructed on the fly using CDN edge workers

It’s important to note that logs constructed using edge workers are a stream of logs, rather than the traditional access log files.

The anatomy of a log file record

Now that we’ve got that out of the way, let’s get our hands dirty and view a sample record from an nginx access log. To give you some context, this record describes one request out of tens of thousands my personal website has received over the last month.

66.249.77.28 - - [25/May/2021:07:50:39 +0200] "GET / HTTP/1.1" 200 12179 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Let’s dissect this record and see what we’re looking at:

- 66.249.77.28 – the requester’s IP address.

- The first dash (-) – hard-coded by default on nginx (which the record above comes from). Back in the day this field was used to identify the client making the HTTP request, but nowadays it is no longer used.

- The second dash (-) – an optional field for user identification. For example, when requests to access HTTP Auth-protected URLs are made, you’ll see the username here. If no user identification is sent, the record will contain a dash.

- [25/May/2021:07:50:39 +0200] – the date and time of the request.

- "GET / HTTP/1.1" – the request, consisting of the HTTP Method (“GET”), the requested resource (“/” – the homepage) and the HTTP version used (“HTTP/1.1”).

- 200 – the HTTP response code.

- 12179 – the size in bytes of the resource that was requested. When redirected resources are requested, you’ll either see zero or a very low value here, as there is no body payload to be returned.

- "-" – had there been a referrer, it would have been shown here instead of the dash.

- "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" – the requester’s user-agent string, which can be used to identify the requester.
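As a rough illustration of how these fields can be pulled apart programmatically, the following Python sketch parses the sample record with a regular expression. The pattern and the function name parse_record are assumptions written for the Combined Log Format shown above; a server with a custom log format would need a different pattern. Note that parsing the month name with %b assumes an English locale.

```python
import re
from datetime import datetime

# The sample record from this article.
LINE = (
    '66.249.77.28 - - [25/May/2021:07:50:39 +0200] "GET / HTTP/1.1" 200 12179 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)

# One named group per field of the Combined Log Format.
COMBINED = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_record(line):
    """Return the record's fields as a dict, or None if the line does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    record = match.groupdict()
    record["time"] = datetime.strptime(record["time"], "%d/%b/%Y:%H:%M:%S %z")
    record["status"] = int(record["status"])
    # Some servers log "-" instead of 0 when no body was sent.
    record["size"] = 0 if record["size"] == "-" else int(record["size"])
    return record


record = parse_record(LINE)
print(record["ip"], record["path"], record["status"], record["size"])
```

Returning None for lines that don't match makes it easy to count and inspect malformed records instead of silently dropping them.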

Logging configurations can vary significantly. You may also encounter the request time (how long the server spent processing the request) – and some go all-out and even log the full body response.


User-agent strings

A record contains the requester’s user-agent string, which can be used to help identify the requester. In the example above, we see the request was made by Google’s main crawler, called Googlebot.

Google has different crawlers for different purposes, and the same holds true for other search engines like Bing, DuckDuckGo, and Yandex.

🚨 Important

The user-agent strings that you find in your log files have nothing to do with the robots.txt user agent or the user agent used in robots directives to influence crawling, indexing, and serving behavior.

However, well-behaving crawlers will have their unique identifier (e.g. Googlebot) present in all three to allow for easy identification.

Overview of user-agent strings

Common misconceptions around log file analysis

Log file analysis is unimportant for small websites

Those of you with small websites may be wondering by now whether there’s any value in log file analysis.

There definitely is, because it’s important to understand how search engines crawl your site and how this results in indexed pages. Without log files, you’d just be guessing. And if your website is important to your business, that’s too risky.

Log file analysis is a one-time thing

How often should you be performing log file analysis? Like many things in SEO, log file analysis is not a one-off task. It’s an ongoing process. Your website is constantly changing, and search engine crawlers are adapting to these changes. As an SEO, it’s your responsibility to monitor their behavior to make sure the crawling and indexing process runs smoothly.

Google Search Console’s Crawl Stats report is a replacement for log files

In November 2020, Google revamped their “Crawl Stats” report in Google Search Console. But it’s still not a replacement for log files! While it is a big improvement compared to the previous Crawl Stats report, the new report only contains information about Google’s crawlers, and it only provides a high-level digest of their crawl behavior. You can drill down in the dataset, but you’ll soon find you’re looking at sampled data.

If you can’t get your hands on log files, then the Crawl Stats report is of course useful, but it’s not meant to be a replacement for log files.

Google Analytics shows you search engines’ crawl behavior

In case you’re wondering why we haven’t brought up Google Analytics yet, that’s because Google Analytics does not track how search engine crawlers behave on your site.

Google Analytics aims to track what your visitors do on your site. Tracking search engine behavior, meanwhile, is a whole different ball game. And besides, search engine crawlers don’t execute the Google Analytics tracking code (or tracking code for other analytics platforms).

Where to find your log files

We now know what log files are, what different types there are, and why they’re important. So let’s move on to the next step and describe where to find them!

As we mentioned in the Access logs section, if you’ve got a complex hosting setup, you’ll need to take action to gather the log files. So, before you go looking for your log files, make sure you have a firm understanding of your hosting setup.

Web servers

The most popular web servers are Apache, nginx, and IIS. You’ll often find the access logs in their default location, but keep in mind that the web server can be configured to save them in a different location. Or, access logging may be disabled altogether.

See below for links to documentation explaining web server access log configuration, and where to find the logs:

Load balancers

CDNs

Log file history

Keep in mind that log files may only be kept for a short time, for example 7 to 30 days.

If you’re doing log file analysis to get an idea of how search engine behavior has changed over time, you’re going to need a lot of data. Maybe even 12–18 months’ worth of log files.

For most log file analysis use cases, we’d recommend analyzing at least 3 months’ worth of log files.

Filter out non-search-engine crawler records

When doing log file analysis for SEO, you’re only interested in seeing what search engine crawlers are doing, so go ahead and filter out all other records.

You can do this by removing all records made by clients that don’t identify as a search engine in their user-agent string. To get you started with a list of user-agent strings you’ll be interested in, here are Google's user-agent strings, and here are Bing's.

Be sure to also verify that you’re really dealing with search engine crawlers rather than other crawlers posing as search engine crawlers. We recommend checking out Google's documentation on this, but you can apply this to other search engines as well.

This process should also filter out any records containing personally identifiable information (PII) that could potentially identify specific individuals, such as IP addresses, usernames, phone numbers, and email addresses.
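The two filtering steps above (keep only clients that identify as a search engine, then verify them) could be sketched in Python as follows. The verification follows the reverse-then-forward DNS lookup approach described in Google's documentation. The token list, the trusted host suffixes, and the function names are assumptions for illustration; check each search engine's own documentation for the host names it actually uses. The lookups are injectable so the example runs offline with stand-in data.

```python
import socket

# Assumption: substrings that mark the crawlers you care about; extend as needed.
CRAWLER_TOKENS = ("googlebot", "bingbot")
# Assumption: host name suffixes to trust; verify these against each engine's docs.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com", ".search.msn.com")


def claims_to_be_crawler(user_agent):
    """True if the user-agent string contains one of the crawler tokens."""
    ua = user_agent.lower()
    return any(token in ua for token in CRAWLER_TOKENS)


def is_verified_crawler(ip, reverse_lookup=None, forward_lookup=None):
    """Reverse-resolve the IP, check the host suffix, then forward-resolve to confirm."""
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        host = reverse_lookup(ip).rstrip(".").lower()
        if not host.endswith(TRUSTED_SUFFIXES):
            return False
        return ip in forward_lookup(host)
    except OSError:  # covers socket.herror and socket.gaierror
        return False


# Stand-in DNS data so the example does not need network access (hypothetical hosts).
FAKE_REVERSE = {"66.249.77.28": "crawl-66-249-77-28.googlebot.com",
                "203.0.113.9": "host.example.net"}
FAKE_FORWARD = {"crawl-66-249-77-28.googlebot.com": ["66.249.77.28"]}

records = [
    ("66.249.77.28", "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"),
    ("203.0.113.9", "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"),
    ("198.51.100.4", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"),
]
verified = [
    (ip, ua) for ip, ua in records
    if claims_to_be_crawler(ua)
    and is_verified_crawler(ip, FAKE_REVERSE.__getitem__, lambda h: FAKE_FORWARD.get(h, []))
]
print(verified)
```

In real use you would call is_verified_crawler(ip) without the stand-in lookups and cache the result per IP address, since DNS lookups are slow compared to reading log lines.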

Use cases for log file analysis

Now, it’s time to go through the most common use cases for log file analysis to better understand how search engines behave on your site, and what you can do to improve your SEO performance. Even though we’ll primarily mention Google, all of the use cases can be applied to other search engines too.

Here are all of the use cases we'll cover:

- 1. Understand crawl behavior

- 2. Verify alignment on what’s important to your business

- 3. Discover crawl budget waste

- 4. Discover sections with most crawl errors

- 5. Discover indexable pages that Google isn’t crawling

- 6. Discover orphan pages

- 7. Keep tabs during a migration project

1. Understand crawl behavior

The best starting point for you is to first understand how Google is currently crawling your site. Enter the wondrous land of log files.

The goals here are as follows:

- Build foundational knowledge that’s required to make the most of the use cases we’ll cover below.

- Improve SEO forecasts by getting better at predicting how long it’ll take for new and updated content to start ranking.

1a. Build foundational knowledge

Browse through last week's logs. You’ll likely see:

- Requests to your robots.txt file: Google's refreshing the rules of engagement for your site.

- Requests to existing URLs that return the 200 OK status code: that’s Google recrawling pages. Absolutely normal and desired behavior.

- Requests to assets such as custom fonts, CSS and JS files that return the 200 OK status code. Google needs all of these to render your pages. Again, this is normal and desired behavior.

- Requests to URLs that are long gone and return the 404 Not Found status code. Google has a long memory, and they’ll keep retrying old URLs every once in a while. It can take years before they stop doing so. Nothing to worry about here either as long as you aren't linking to these URLs.

- Requests that return the 301 Moved Permanently status code for URL variants that redirect to preferred URLs. Perhaps your website contains some links to redirecting URLs or you had them in the past. And it’s highly likely others link to incorrect URLs on your site. Often this is beyond your control.
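If you would rather get this overview as numbers than by scrolling through raw lines, a small Python sketch like the one below can group requests by type and status code. It assumes you have already parsed your log into (path, status) pairs; the sample paths, the extension list, and the helper name kind_of are made up for illustration.

```python
import posixpath
from collections import Counter

# Assumption: file extensions that mark a request as an asset rather than a page.
ASSET_EXTENSIONS = {".css", ".js", ".woff", ".woff2", ".png", ".jpg", ".svg"}


def kind_of(path):
    """Classify a requested path as robots.txt, asset, or page."""
    if path == "/robots.txt":
        return "robots.txt"
    extension = posixpath.splitext(path.split("?", 1)[0])[1].lower()
    return "asset" if extension in ASSET_EXTENSIONS else "page"


# Hypothetical (path, status) pairs taken from verified Googlebot records.
requests = [
    ("/robots.txt", 200),
    ("/", 200),
    ("/assets/site.css", 200),
    ("/old-page/", 404),
    ("/Blog", 301),
    ("/blog/", 200),
]

summary = Counter((kind_of(path), status) for path, status in requests)
for (kind, status), hits in sorted(summary.items()):
    print(f"{kind:<10} {status} {hits}")
```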

Next steps

There are no next steps. This use case is all about getting a rough idea of how Google is currently crawling your site.

1b. Improve SEO forecasts

Now, zoom in on recently published content: how long after publishing did Google end up crawling the content?

Combining this information with when the content started driving organic traffic, and when it's firing on all cylinders can help you tremendously in SEO forecasting. For example, you may find something like:

- Published: June 1, 2021 9:00 AM

- First crawled: June 2, 2021 2:30 AM

- First organic traffic: June 8, 2021 3:30 AM

- Driving significant organic traffic: June 22, 2021 3:30 AM

Do this for every new content piece, and categorize the results per content type. For example:

- Product category pages and blog articles take on average one week to start driving organic traffic, and take six weeks to reach their full potential.

- Product detail pages take on average two weeks to start driving organic traffic, and take four weeks to reach their full potential.

When doing this at scale, you will find patterns—extremely useful input for SEO forecasting!
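The "published" versus "first crawled" comparison can be automated once you have publish dates from your CMS and crawl timestamps from your logs. The Python sketch below is one possible way to do it; the URL, the timestamps (taken from the example above), and the function name time_to_first_crawl are illustrative, and it assumes both sources use the same time zone.

```python
from datetime import datetime

# Hypothetical publish times, e.g. exported from your CMS.
published = {"/blog/new-article/": datetime(2021, 6, 1, 9, 0)}

# Hypothetical (path, timestamp) pairs from verified Googlebot log records.
crawls = [
    ("/", datetime(2021, 6, 1, 10, 0)),
    ("/blog/new-article/", datetime(2021, 6, 5, 11, 0)),
    ("/blog/new-article/", datetime(2021, 6, 2, 2, 30)),
]


def time_to_first_crawl(published, crawls):
    """Return, per published URL, the delay between publishing and the first crawl."""
    first_seen = {}
    for path, when in crawls:
        # Ignore URLs we have no publish date for, and crawls that predate publishing.
        if path in published and when >= published[path]:
            if path not in first_seen or when < first_seen[path]:
                first_seen[path] = when
    return {path: first_seen[path] - published[path] for path in first_seen}


delays = time_to_first_crawl(published, crawls)
for path, delay in delays.items():
    print(path, delay)
```

Averaging these delays per content type gives you the kind of figures listed above.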

Next steps

If you feel Google's too slow to crawl new content, you can try the following:

- Analyze how often your XML sitemap gets crawled. If it's daily or a few times a week, you're fine. However, a few times a month is quite low. It's recommended that sites with more than 10,000 pages split their XML sitemap into smaller ones and have a dedicated XML sitemap for new content to improve its discovery, so it can get indexed faster. Also, be sure to verify that your XML sitemap is valid to rule out any issues related to that.

- Add more internal links to your new content: experiment with adding links to the main navigation, sidebar navigation, and footer navigation, and with linking to it from related pages and parent pages.

- Build external links to your new content. This will speed up the entire process, and new content will be driving traffic in no time. One caveat: this is easier said than done, we know.

- Still no luck? Then perhaps you're publishing more content than Google can crawl within your site's assigned crawl budget, or your crawl budget isn't used efficiently. Learn more about crawl budget here.

Improve discovery of new content

Add links to newly published content on your most frequently crawled pages to boost Google's discovery process.

2. Verify alignment on what’s important to your business

Perhaps Google's spending a lot of crawl budget on URLs that are irrelevant to you, neglecting pages that should be spearheading your SEO strategy.

The goal here is to find out if this is the case, and if so, to fix it.

Verify that:

- The top 50 most requested pages include your most important pages.

- The top 10 directories receiving the most requests are your most important ones.
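Both checks boil down to counting requests. Here is a minimal Python sketch, assuming you already have a list of requested paths from verified search engine records; the sample paths and function names are hypothetical, and "directory" is interpreted here as the first path segment.

```python
from collections import Counter


def top_level_directory(path):
    """Map a path to its first directory, e.g. /blog/post-1/ -> /blog/."""
    clean = path.split("?", 1)[0]
    segments = [segment for segment in clean.split("/") if segment]
    if len(segments) > 1 or (segments and clean.endswith("/")):
        return f"/{segments[0]}/"
    # Top-level files and the homepage are counted under the root.
    return "/"


def top_pages(paths, n=50):
    """The n most requested paths with their request counts."""
    return Counter(paths).most_common(n)


def top_directories(paths, n=10):
    """The n directories that received the most requests."""
    return Counter(top_level_directory(path) for path in paths).most_common(n)


# Hypothetical requested paths.
paths = ["/", "/blog/post-1/", "/blog/post-2/", "/blog/post-1/",
         "/products/shoes/", "/search?q=red", "/blog/"]

print(top_pages(paths, 3))
print(top_directories(paths, 3))
```

Comparing these two lists with the pages and sections your business cares about most shows quickly where Google's attention and your priorities diverge.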

Next steps

Here's what you can do to realign Google with what's important to your business:

- Adjust your internal link structure to put more focus on your most important pages and site sections. Redo your main navigation, sidebar navigation, footer navigation, and in-page links: the whole shebang. Because your internal linking options are finite, you need to choose which pages to favor over others. Remember, if you link to every page on your site evenly they all become important, resulting in no pages being important.

- Build external links to the pages that matter most to you. Having external links pointing to these pages makes for more entry points, and is a signal for Google to increase the crawl frequency too.

⚠️ XML Sitemap changefreq will not help

Now, you may think to leverage the changefreq field in your XML sitemap to realign Google's focus, but this field is (largely) ignored. Your best bet for fixing the alignment issue is to go through the steps we just discussed above.

3. Discover crawl budget waste

You want Google to use your site's crawl budget on crawling your most important pages. While crawl budget issues mostly apply to large sites, analyzing crawl budget waste will help you improve your internal link structure and fix crawl inefficiencies. And there's tremendous value in that.

Let's find out if Google's wasting crawl budget on URLs that are completely irrelevant, and fix it!

Follow these steps to discover crawl budget waste:

- Analyze the ratio between requested page URLs that do and don't contain parameters. Oftentimes parameters are just used to slightly modify page content, and these pages aren't supposed to be indexed. If you don't want these pages indexed, then Google shouldn't be obsessing over them. You want to see that the vast majority (e.g. 95%) of requests are made to page URLs without parameters.

- Analyze how frequently Google crawls assets that don't change that often. For example, if you're using custom fonts, these don't need to be crawled multiple times a day.

- Are you seeing a lot of 301, 302, 307 and 308 redirects?

- Is Google adhering to your robots.txt directives? If you've disallowed certain sections because you know they're a waste of crawl budget, you want to make sure Google keeps out of there.
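Several of these checks can be expressed as simple ratios over your parsed records. The Python sketch below computes the share of requests to URLs with parameters, the share of redirects, and the crawled URLs that your robots.txt disallows. The records and the robots.txt rules are made up, and the robots.txt check uses Python's standard urllib.robotparser, which matches plain path prefixes and, as far as we know, does not implement Google's wildcard extensions, so treat its verdicts as a first pass only.

```python
from urllib.robotparser import RobotFileParser

REDIRECT_CODES = (301, 302, 307, 308)


def share(records, predicate):
    """Fraction of records for which the predicate holds (0.0 for an empty list)."""
    if not records:
        return 0.0
    return sum(1 for record in records if predicate(record)) / len(records)


# Hypothetical (path, status) pairs from verified Googlebot records.
records = [
    ("/shoes/", 200),
    ("/shoes/?color=red", 200),
    ("/old", 301),
    ("/internal-search/shoes", 200),
]

# Hypothetical robots.txt rules; in practice, read the lines of your real file.
robots = RobotFileParser()
robots.parse(["User-agent: *", "Disallow: /internal-search/"])

with_parameters = share(records, lambda record: "?" in record[0])
redirects = share(records, lambda record: record[1] in REDIRECT_CODES)
disallowed_but_crawled = [
    path for path, _ in records if not robots.can_fetch("Googlebot", path)
]

print(f"URLs with parameters: {with_parameters:.0%}")
print(f"Redirects: {redirects:.0%}")
print("Disallowed but crawled:", disallowed_but_crawled)
```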

Next steps

Here's what you can do to reduce crawl budget waste:

- If you're finding that a significant number of requests are made to page URLs with parameters, find out where Google learned about these URLs and discourage crawling by removing links and adding the nofollow attribute to links. If this isn't enough, prevent Google from requesting these URLs with parameters by disallowing them in your robots.txt file.

- If Google's crawling your assets way too much, see if your Cache-Control HTTP headers are properly configured. For example, you may be telling Google only to cache assets for an hour while the assets only change a few times a year. In this case, significantly increase the cache times. Learn more about Cache-Control.

- If you're seeing a lot of 301, 302, 307 and 308 redirects, you may have a lot of redirecting links in your site, leading to crawl inefficiencies. Update the links to point to the redirect's final destination URL directly to fix this. We often see this happen with links in the main navigation, and fixing those is generally easy and quick. Keep in mind that, despite falling in the 3xx range, a 304 isn't a typical redirect. It signals that the requested URL hasn't changed since the last time it was requested, and that the client should use the copy it already has on file.

- If Google isn't adhering to your robots.txt directives, your directives may be incorrect (the most likely scenario) or Google is ignoring your directives. That's rare, but it happens. The best thing to do in this case is to post on the Google Search Help forum and to reach out to the ever-patient, cheese-loving John Mueller. Don't waste his time though: do your homework to make sure Google is in fact ignoring your robots.txt directives.

4. Discover sections with most crawl errors

When Google is hitting lots of crawl errors (4xx and 5xx HTTP status codes), they're having a poor crawl experience. Not only is this a waste of crawl budget, but Google can choose to stop their crawl too. And it's likely visitors would have a similarly poor experience.

Let's find out where most crawl errors are happening on your site, and get them fixed.

To find which sections are having the most crawl errors, pull up an overview of the number of 2xx, 4xx and 5xx responses per section and sort based on 4xx and 5xx. Now compare the ratio between 2xx on one hand and 4xx and 5xx errors on the other hand: which sections need the most TLC? Maybe you're dealing with a legacy forum, community section or blog. Whatever it is, by analyzing the crawl errors like this you'll learn where most crawl errors are located.
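The per-section overview described above could be built along these lines in Python. The sketch assumes (path, status) pairs from verified crawler records and treats the first path segment as the section; the sample data and the function name errors_by_section are hypothetical.

```python
from collections import Counter, defaultdict


def errors_by_section(records):
    """Return (section, 2xx, 4xx, 5xx, error ratio) rows, worst section first."""
    table = defaultdict(Counter)
    for path, status in records:
        # First path segment is the section; the homepage ends up under "/".
        section = "/" + path.split("?", 1)[0].lstrip("/").split("/", 1)[0]
        table[section][f"{status // 100}xx"] += 1
    rows = []
    for section, counts in table.items():
        total = sum(counts.values())
        errors = counts["4xx"] + counts["5xx"]
        rows.append((section, counts["2xx"], counts["4xx"], counts["5xx"], errors / total))
    return sorted(rows, key=lambda row: row[4], reverse=True)


# Hypothetical records.
records = [
    ("/forum/thread-1", 404), ("/forum/thread-2", 500), ("/forum/thread-3", 200),
    ("/blog/a", 200), ("/blog/b", 200), ("/blog/c", 404), ("/blog/d", 200),
]

rows = errors_by_section(records)
for section, ok, client_errors, server_errors, ratio in rows:
    print(f"{section:<8} 2xx={ok} 4xx={client_errors} 5xx={server_errors} errors={ratio:.0%}")
```

Sorting by error ratio rather than by raw error count keeps a small but badly broken section from hiding behind a large, mostly healthy one.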

Please note that you can't fix every 4xx issue. For example, if you removed a page that you no longer needed, removed all internal links to it, and it didn't make sense to redirect it, then it'll return a 4xx status code. Google will request it less and less, but it may take years for them to stop crawling it entirely.

Next steps

To fix 4xx issues, you can do different things:

- Rebuild the page if it has value to visitors, allowing you to fix the 4xx issue and win back the value the previous page accumulated.

- Redirect the URL to a relevant alternative page, passing on the authority and relevance.

- Remove links to the URL that returns 4xx issues to stop sending crawlers and visitors there.

Since most 5xx are typically application errors, you'll need to work together with your development and/or DevOps team on fixing these.

5. Discover indexable pages that Google isn’t crawling

Ideally, all of your indexable pages are frequently crawled by Google. The general consensus is that pages that get crawled frequently are more likely to perform well than those that are crawled infrequently.

So, let's find out which of your indexable pages are crawled infrequently — and fix that.

First, you need to have an overview of all of your indexable pages. Secondly, you need to enrich that dataset with information from your log files. You'll end up with a list of pages, and can then segment those.

For example, indexable pages that were last crawled:

- More than eight weeks ago

- More than four weeks ago

- More than two weeks ago

- More than one week ago

You'll want to increase the crawl frequency for some of these pages, and for others you'll realize Google's treating them correctly. You may end up making these pages non-indexable, or even remove them because they don't carry any value.
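The segmentation above can be sketched in Python as follows, assuming you have a list of indexable URLs (for example from a crawl) and the most recent crawl timestamp per URL from your logs. The URLs, dates, bucket labels, and function name are illustrative assumptions.

```python
from datetime import datetime, timedelta

# Buckets are checked from oldest to newest, so each page lands in one bucket only.
BUCKETS = [
    (8, "more than eight weeks ago"),
    (4, "more than four weeks ago"),
    (2, "more than two weeks ago"),
    (1, "more than one week ago"),
]


def bucket_by_last_crawl(indexable, last_crawled, now):
    """Label every indexable URL with how long ago it was last crawled."""
    result = {}
    for url in indexable:
        seen = last_crawled.get(url)
        if seen is None:
            result[url] = "not crawled in this log window"
            continue
        age = now - seen
        result[url] = next(
            (label for weeks, label in BUCKETS if age > timedelta(weeks=weeks)),
            "within the last week",
        )
    return result


# Hypothetical inputs.
now = datetime(2021, 6, 30)
indexable = ["/a", "/b", "/c", "/d"]
last_crawled = {
    "/a": datetime(2021, 6, 29),
    "/b": datetime(2021, 6, 10),
    "/c": datetime(2021, 4, 1),
}

segments = bucket_by_last_crawl(indexable, last_crawled, now)
for url, label in segments.items():
    print(url, "->", label)
```

Pages that never show up in the logs get their own label, because with a short log retention window "not seen" does not necessarily mean "never crawled".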

Next steps

Here's what you can do to increase the crawl frequency for indexable pages that you care about:

- Verify that they are in your XML sitemap. If not, add them.

- Build more internal links.

- Update their content, and keep doing this regularly.

- Build more external links to them.

6. Discover orphan pages

Orphan pages are pages that have no internal links, and hence live outside of your site structure. Your SEO monitoring platform doesn’t find them because it likely only relies on link finding and your XML sitemap. Making these pages part of your site structure (again) can really help you improve your site's SEO performance. Finding authoritative orphan pages is like finding the lost key to your wallet containing ten Bitcoin you bought in 2013.

Let's find out if your website has orphan pages, and adopt them into your site structure.

Here's how to find the orphan pages:

- Pull up a list of all requested URLs.

- Filter out non-page URLs and non-indexable pages.

- Remove URLs with internal links (ContentKing needed).
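In code, these three steps amount to a filter followed by two set differences. The Python sketch below assumes you have the requested URLs from your logs, plus a list of non-indexable URLs and a list of internally linked URLs from a crawl of your site; all sample URLs, the extension list, and the function names are hypothetical.

```python
import posixpath

# Assumption: extensions that mark a URL as a non-page resource.
NON_PAGE_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".woff2", ".pdf"}


def is_page(url):
    """True if the URL does not look like an asset or document download."""
    extension = posixpath.splitext(url.split("?", 1)[0])[1].lower()
    return extension not in NON_PAGE_EXTENSIONS


def find_orphan_pages(requested_urls, non_indexable_urls, internally_linked_urls):
    """Requested pages that are indexable but have no internal links pointing to them."""
    pages = {url for url in requested_urls if is_page(url)}
    return sorted(pages - set(non_indexable_urls) - set(internally_linked_urls))


# Hypothetical inputs: URLs from the logs, and two lists from a site crawl.
requested = ["/", "/style.css", "/old-landing-page/", "/blog/", "/thank-you/"]
non_indexable = {"/thank-you/"}
internally_linked = {"/", "/blog/"}

orphans = find_orphan_pages(requested, non_indexable, internally_linked)
print(orphans)
```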

Next steps

Now, go through the list of orphan pages and analyze whether they have value:

- Are they getting (organic) traffic?

- Do they have external links pointing to them?

- Do they contain useful content for your visitors?

And then decide their fate:

- If you want to keep the pages, make them part of your site structure, update the content and add internal links to related pages.

- If you don't want to keep the pages and they don't carry any value, just remove them. If they do carry value, 301 redirect them to your most relevant page.

7. Keep tabs during a migration project

During migrations, keeping tabs on Google's crawling behavior is important for two reasons:

- In preparation for the migration, you need to create a redirect plan that includes your most important URLs and where they should redirect to.

- After you've launched your changes, you need to know whether Google's crawling your new URLs and how they're progressing. If something is holding them back, you need to know pronto.

7a. Complete redirect plan

To verify whether your redirect plan is complete, pull up your most frequently crawled URLs and cross-reference them with your redirect plan.
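A minimal Python sketch of this cross-reference, assuming your redirect plan is available as a mapping from old URL to new URL and that you have a list of crawled paths from your logs (the sample data and the function name are made up):

```python
from collections import Counter


def missing_from_redirect_plan(crawled_paths, redirect_plan, top_n=100):
    """Most frequently crawled paths that have no entry in the redirect plan."""
    most_crawled = Counter(crawled_paths).most_common(top_n)
    return [(path, hits) for path, hits in most_crawled if path not in redirect_plan]


# Hypothetical crawled paths and redirect plan (old URL -> new URL).
crawled = ["/a", "/a", "/a", "/b", "/b", "/c"]
redirect_plan = {"/a": "/new-a", "/c": "/new-c"}

missing = missing_from_redirect_plan(crawled, redirect_plan)
print(missing)
```

The hit counts in the result help you prioritize: a frequently crawled URL that is missing from the plan deserves attention before a rarely crawled one.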

Next steps

If you've found some URLs to be missing from your redirect plan, add them.

7b. Monitor Google's crawl behavior

To verify Google is crawling your new URLs, go through the steps in use case 5. Discover indexable pages that Google isn’t crawling.

Next steps

Migrations always shake things up: Google needs to learn about your new site structure, content changes and so on. It always takes time for them to adjust, but if you're finding they're taking too long, here's what you can do to speed up the crawling process for new URLs that haven't been crawled yet:

- Tweak your internal link structure so Google is more likely to crawl these new URLs.

- Add a separate XML sitemap containing all of the new URLs.

- Build external links to the new URLs (yes, this is easier said than done, we know).

The importance of ongoing monitoring

Your website is never done, and SEO is never done either. If you want to win at SEO, you'll always be tweaking your website.

With all of the changes you continuously make, it's important to make sure log file analysis is part of your ongoing SEO monitoring efforts. Go through the use cases we've covered above, and set up alerts in case your log files show abnormal behavior from Google.

Your log files are the only way to learn about Google's true behavior on your site. Don't leave money on the table: leverage insights from log file analysis.