"Indexed, though blocked by robots.txt": what does it mean and how to fix?

“Indexed, though blocked by robots.txt” indicates that Google indexed URLs even though they were blocked by your robots.txt file.

Google has marked these URLs as “Valid with warning” because they’re unsure whether you want to have these URLs indexed. In this article you'll learn how to fix this issue.

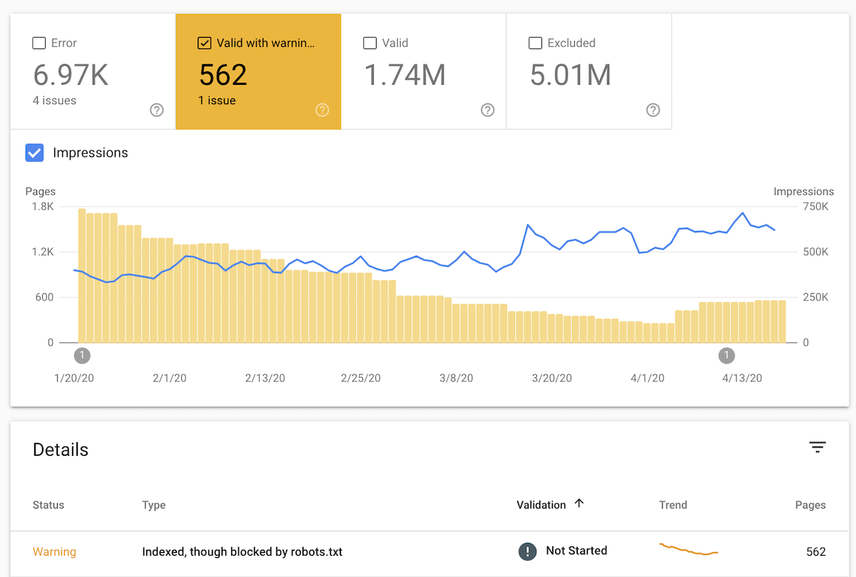

Here’s what this looks like in Google Search Console’s Index Coverage report, with the amount of URL impressions shown:

Double-check on URL level

You can double-check this by going to Coverage > Indexed, though blocked by robots.txt and inspecting one of the URLs listed.

Then under Crawl it'll say No: blocked by robots.txt for the field Crawl allowed and Failed: Blocked by robots.txt for the field Page fetch.
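If you'd like to run the same check outside of Google Search Console, here's a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the example.com URLs are made up for illustration. Keep in mind that Python's parser does not implement every detail of Google's matching rules (wildcards, for instance), so treat the result as a first indication rather than the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/private/page"))
print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/blog/post"))
```

To test your live file instead, you could download your own robots.txt and pass its text to `is_crawl_allowed`.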

So what happened?

Normally, Google wouldn’t have indexed these URLs but apparently they found links to them and deemed them important enough to be indexed.

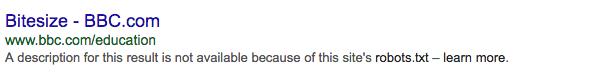

It’s likely that the snippets that are shown are suboptimal, such as for instance:

How to fix “Indexed, though blocked by robots.txt”

- Export the list of URLs from Google Search Console and sort them alphabetically.

- Go through the URLs and check whether the list includes URLs…

  - That you want to have indexed. If this is the case, update your robots.txt file to allow Google to access these URLs.

  - That you don’t want search engines to access. If this is the case, leave your robots.txt as-is but check if you’ve got any internal links that you should remove.

  - That search engines can access, but that you don’t want to have indexed. In this case, update your robots.txt so Google is allowed to crawl these URLs, and apply robots noindex directives. Google can only see a noindex directive on pages it’s allowed to crawl.

  - That shouldn’t be accessible to anyone, ever. Take, for example, a staging environment. In this case, follow the steps explained in our Protecting Staging Environments article.

- In case it’s not clear to you what part of your robots.txt is causing these URLs to be blocked, select a URL and hit the TEST ROBOTS.TXT BLOCKING button in the pane that opens on the right-hand side. This will open up a new window showing you what line in your robots.txt prevents Google from accessing the URL.

- When you’re done making changes, hit the VALIDATE FIX button to request Google to re-evaluate your robots.txt against your URLs.
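When the export contains a lot of URLs, grouping them by site section can make the review in the steps above go faster. Below is a rough sketch of that idea in Python. The `group_by_section` helper and the example.com URLs are hypothetical; you'd replace the list with the URLs from your own export.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_section(urls):
    """Sort URLs alphabetically and group them by their first path segment."""
    groups = defaultdict(list)
    for url in sorted(urls):
        segments = [part for part in urlsplit(url).path.split("/") if part]
        section = "/" + (segments[0] if segments else "")
        groups[section].append(url)
    return dict(groups)


# Hypothetical URLs standing in for an export from Google Search Console.
exported_urls = [
    "https://example.com/staging/home",
    "https://example.com/cart",
    "https://example.com/admin/users",
    "https://example.com/admin/login",
]

for section, members in group_by_section(exported_urls).items():
    print(f"{section}: {len(members)} URL(s)")
```

Sections with many URLs are usually the ones that map to a single disallow rule, so they're a sensible place to start deciding between allowing access, removing internal links, or applying noindex.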

Indexed, though blocked by robots.txt fix for WordPress

The process for fixing this issue on WordPress sites is the same as described in the steps above, but here are some pointers to quickly find your robots.txt file in WordPress:

WordPress + Yoast SEO

If you’re using the Yoast SEO plugin, follow the steps below to adjust your robots.txt file:

- Log into your wp-admin section.

- In the sidebar, go to Yoast SEO plugin > Tools.

- Go to File editor.

WordPress + Rank Math

If you’re using the Rank Math SEO plugin, follow the steps below to adjust your robots.txt file:

- Log into your wp-admin section.

- In the sidebar, go to Rank Math > General Settings.

- Go to Edit robots.txt.

WordPress + All in One SEO

If you’re using the All in One SEO plugin, follow the steps below to adjust your robots.txt file:

- Log into your wp-admin section.

- In the sidebar, go to All in One SEO > Robots.txt.

If you're working on a WordPress website that hasn't launched yet, and can’t wrap your head around why your robots.txt contains the following:

User-agent: *
Disallow: /

Then check your settings under: Settings > Reading and look for Search Engine Visibility.

If the box Discourage search engines from indexing this site is checked, WordPress will generate a virtual robots.txt preventing search engines from accessing the site.
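To quickly spot this situation, you could scan a robots.txt file for a blanket disallow that applies to all crawlers. The sketch below is a simplified check, not a full robots.txt parser: the `blocks_everything` helper is hypothetical, and it ignores the possibility that Allow rules or crawler-specific groups override the blanket rule.

```python
def blocks_everything(robots_txt: str) -> bool:
    """Return True if a 'User-agent: *' group contains 'Disallow: /'."""
    agents = []       # user-agents named by the group currently being read
    in_rules = False  # True once the current group has had a rule line
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:
                # A user-agent line after rules starts a new group.
                agents = []
                in_rules = False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/" and "*" in agents:
                return True
    return False


print(blocks_everything("User-agent: *\nDisallow: /\n"))
print(blocks_everything("User-agent: *\nDisallow: /wp-admin/\n"))
```

If the check flags your file on a site that's about to launch, the Search Engine Visibility setting mentioned above is the first thing to look at.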

Indexed, though blocked by robots.txt fix for Shopify

Shopify doesn’t allow you to manage your robots.txt from their system, so you’re working with a default one that’s applied to all sites.

Perhaps you’ve seen the “Indexed, though blocked by robots.txt” message in Google Search Console or received a “New index coverage issue detected” email from Google about it. We recommend always checking which URLs this concerns, because you don’t want to leave anything to chance in SEO.

Review the URLs, and see if any important URLs are blocked. If that’s the case, you’ve got two options which require some work, but do allow you to change your robots.txt file on Shopify:

Whether or not these options are worth it to you depends on the potential reward. If it’s sizable, look into implementing one of these options.

You can take the same approach on the Squarespace platform.

FAQs

Why is Google showing this error for my pages?

Google found links to pages that aren't accessible to them due to robots.txt disallow directives. When Google deems these pages important enough, they'll index them.

How do you fix this error?

The short answer is to make sure that pages you want Google to index are accessible to Google's crawlers, and that pages you don't want indexed aren't linked internally. The long answer is described in the section "How to fix 'Indexed, though blocked by robots.txt'" of this article.

Can I edit my robots.txt file on WordPress?

Yes. Popular SEO plugins such as Yoast SEO, Rank Math and All in One SEO allow you to edit your robots.txt directly from the wp-admin panel.